A flat model device driver for OS/2: Chapter 1 - Introduction


Chapter 1 - Introduction

Problem

OS/2 device driver development is at a standstill. The OS/2 device driver model was derived from a DOS device driver model. It retains much of the 16-bit architecture of the Intel 80286 processor, on which OS/2 1.0 was first implemented. Since that time, the mainstream Personal Computer (PC) processor has grown from a 16-bit architecture to a 32-bit architecture. Because the newer processors are backward compatible, the older driver software still runs without change. However, 16-bit compilers for OS/2 are no longer available, stunting new driver development. The mix of 16-bit and 32-bit interfaces makes driver development difficult, and programmers spend too much time creating specialized code to handle the address conversions.

Purpose

The purpose of this study is to identify the amount of work necessary to convert existing 16-bit device drivers to 32-bit device drivers, and to provide a framework for new device driver development using 32-bit tools. It should be noted that this proposal describes the changes necessary to convert existing 16-bit device drivers to 32-bit, and to allow new 32-bit drivers to co-exist with the older model. While it would have been easy to design a new model based on current technology such as the I2O initiative, the goal of this proposal was to preserve the investment in current device driver development and training.

Importance of Study

This study will help determine if converting existing 16-bit device drivers to 32-bit is feasible, and if the return on programming investment is worth the effort. The data gathered, along with the conclusions, will determine the level of funding and programming resources to allocate.

Scope of Study

This proposal suggests a new device driver model for the OS/2 operating system, and as such, contains several design suggestions and criteria that are specific to OS/2. OS/2 is a hybrid system, part 16-bit and part 32-bit. Many of the functions or methods used or presented in this design are specific to the OS/2 operating system, and therefore not necessarily valid or applicable for other operating systems such as Windows NT or Unix.

Rationale of Study

Since the OS/2 device driver development environment can be problematic, we decided that the best way to verify our design was to actually implement it. Many design flaws or omissions occur only under load, in actual operating conditions, especially those issues related to timing, race conditions, or interrupt latency. By using real working device drivers, we felt that we could expose these problems more quickly than any other method.

Definition of Terms

- API

- Application Programming Interface, a definition of what external functions are available to a program and how to call them.

- Arbitrate

- In programming terms, the ability of the device driver to act as a “traffic cop” to grant or deny hardware access to an application.

- Abstract (verb)

- In programming terms, the verb abstract means to “hide the details”. A device driver abstracts or “hides the details” of the low-level device architecture from the application software.

- Device Driver

- The software program that acts as the interface between an application program and a hardware device. The device driver is responsible for converting high-level requests from the application program into low-level commands that the hardware can understand.

- DMA

- Direct Memory Access, a method of transferring data to and from memory by using a specialized piece of hardware.

- Dynamic Linking

- An architecture that allows external references to be resolved at program load time. This results in smaller executables but a slightly longer initial load time.

- File System

- The internal subsystem in an operating system that controls access to hardware devices.

- Flat Model

- A term that describes a 32-bit addressing mode in which the address contains the actual memory page information and offset. The processor hardware decodes the physical page information. Flat pointers can directly access up to 4GB of memory.

- Hardware

- The physical electrical components of the computer system or device interface.

- Legacy Devices

- A term used to denote older devices and their support hardware. These devices are usually ISA bus devices.

- Multiprocessor

- A computer architecture where the computer is equipped with more than one Central Processing Unit (CPU).

- Polling

- A programming method that involves waiting in a tight loop for a particular bit to change state or a particular event to occur. Polling results in inefficient use of the processor cycles because other programs are prevented from running while polling is being performed.

- Protect Mode

- A specific mode of operation for the Intel 80x86 series of processors that provides for hardware-based memory protection and a 32-bit flat memory architecture.

- Real Mode

- A specific mode of operation for the Intel 80x86 series of processors that provides a one-megabyte memory size with no memory protection. MS-DOS runs in real mode.

- Segment:Offset

- A method of memory addressing for the Intel 80x86 series of processors that forms a 32-bit address from a 16-bit selector and a 16-bit offset. The selector is actually an index into a table of memory descriptors that contain the physical page number and access rights of the memory. The offset portion is only large enough to describe a 64K segment of memory.

- Spinlock

- A specialized program loop that prevents other operations or programs in the system from being executed while the loop is being executed.

- Thunking

- The process of converting 32-bit pointers to their 16-bit equivalents, and 16-bit pointers to their 32-bit equivalents. Thunking from 32 to 16 can be very time-consuming.
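As a rough illustration of the pointer conversion that thunking involves, the sketch below converts between a 16:16 selector:offset pair and a 0:32 flat address. It assumes the tiled LDT arrangement commonly described for 32-bit OS/2, in which each LDT entry maps one consecutive 64KB slice of the flat address space. The function names are hypothetical and are not part of any OS/2 API.

```c
#include <stdint.h>

/* Hypothetical helpers, not an OS/2 API. Assumes a tiled LDT in which
   LDT entry n maps flat addresses n*64KB through n*64KB + 0xFFFF.
   Only meaningful for flat addresses below 512MB
   (8192 selectors of 64KB each). */

/* Selector layout: bits 15..3 = table index, bit 2 = table indicator
   (1 = LDT), bits 1..0 = requested privilege level. */
uint32_t sel_off_to_flat(uint16_t selector, uint16_t offset)
{
    uint32_t index = (uint32_t)selector >> 3;   /* drop the low three bits */
    return (index << 16) | offset;
}

/* Reverse direction: rebuild the selector and set its low three bits
   to 7 (LDT, privilege level 3). */
uint16_t flat_to_selector(uint32_t flat)
{
    return (uint16_t)(((flat >> 16) << 3) | 0x7u);
}

/* The offset is simply the low 16 bits of the flat address. */
uint16_t flat_to_offset(uint32_t flat)
{
    return (uint16_t)(flat & 0xFFFFu);
}
```

The arithmetic itself is cheap; the cost of a real thunk comes from everything around it, such as switching stacks and converting every pointer in a parameter list.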

Overview of the Study

Early Personal Computer (PC) operating systems such as MS-DOS were single tasking, i.e., they were capable of executing only one program at a time. Even though these systems were somewhat slow, they were still much faster than the devices they needed to access. Most output information was sent to a line printer and most input data was read from a keyboard. If a program needed to perform input or output (I/O) to one of these devices, the system would effectively remain idle while waiting for the data to be sent or entered. This method of performing I/O, called polling, was very inefficient. Even though the computer was capable of executing thousands of instructions in between each keystroke, it was kept busy while I/O was being performed. If a program needed to print something on a printer, it would send the data one character at a time, waiting for the device to acknowledge that the character was accepted before sending the next character. Since the computer processed the data faster than it could be printed, it would sit idle for much of the time waiting for the printer to do its job. As technology progressed, faster I/O devices became available, but so did faster computers. The computer was at the mercy of the input and output devices it needed to access.

The problem was exacerbated by the fact that each one of these I/O devices required data in a different form. Some devices required data in a serial fashion, bit by bit, while others required data 8 or 16 bits at a time. Some line printers printed on 8 1/2 by 11-inch paper and some on 11 by 14-inch paper. Magnetic tape storage devices used different size tapes and formats, and disk storage devices differed in both the amount and the method of storage.

The device driver solved the problems associated with the different types of devices, and provided a more efficient method of utilizing the processing power of the computer while it was performing input and output operations. The device driver is a small program inserted between the program performing the I/O and the actual hardware device such as a printer or magnetic disk drive. The device driver is programmed with the physical characteristics of the device, and acts as an interface between the program and the device. For example, the device driver for a line printer might be programmed with the characteristics of the printer, such as the number of characters per line or the size of the paper that the device supports. The device driver for a magnetic tape drive might be programmed with the physical characteristics of the tape mechanism, such as the tape format and density. The ability of the device driver to hide or abstract the details of a hardware device is a fundamental concept in the design of today’s operating systems and applications software. Programmers writing software do not need detailed knowledge of the devices that the program may use, and do not have to provide specialized programs to access those devices. For example, the software for an application that needs to send data to a printer can be written the same way no matter what type of printer is installed on the system. The same data can be sent to a high-resolution laser printer or a low-resolution dot-matrix printer with the same results. Old printers can be deleted, or new types of printers added without the need to change the application software.

Device drivers also address the problem of polling. Since the device driver has intimate knowledge of how to deal with the hardware, there is no reason why the application program has to wait around for each character to be printed. It can, for example, send the device driver a block of 256 characters and return to continue executing the application program while the device driver handles the details of sending the data to the device. When the device driver finishes sending all the data, it notifies the application program that it needs more data. The application then sends the next block of data to the device driver for processing. While the device driver is performing the I/O, the application can continue processing data or performing other operations. This results in more efficient use of the system processor.

The use of device drivers became even more important when operating systems such as Windows and OS/2 appeared that could run more than one program simultaneously. In systems that can run many programs at the same time, it is possible that more than one program might try to access a hardware device at the same time. The device driver performs an important function by controlling access to the hardware device. In some cases, such as a printer, the device driver serializes or arbitrates access to the device so that one program’s output does not appear in the middle of another program’s output. Once the printer has begun servicing a program, subsequent attempts to access the printer are refused, and the “busy” indication is sent back to the requesting program. The requesting program can then decide to wait for the printer to become available or to access the printer at a later time.
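The arbitration described above can be pictured with a minimal sketch. All names here are hypothetical and do not reflect the OS/2 driver interface. A real driver would also need to make the test-and-set of the ownership flag atomic with respect to interrupts and other processors, which this sketch does not attempt.

```c
/* Hypothetical status codes and structure, for illustration only. */
enum { DEV_OK = 0, DEV_BUSY = 1, DEV_NOT_OWNER = 2 };

typedef struct {
    int owned;      /* nonzero while a program holds the device */
    int owner_id;   /* identifier of the program that holds it */
} device_gate;

/* Grant the device to program_id, or report "busy" if it is taken. */
int gate_open(device_gate *g, int program_id)
{
    if (g->owned)
        return DEV_BUSY;
    g->owned = 1;
    g->owner_id = program_id;
    return DEV_OK;
}

/* Release the device; only the current owner may do so. */
int gate_close(device_gate *g, int program_id)
{
    if (!g->owned || g->owner_id != program_id)
        return DEV_NOT_OWNER;
    g->owned = 0;
    return DEV_OK;
}
```

A second program calling `gate_open` while the first still owns the device receives `DEV_BUSY` and can decide whether to wait or retry later, which mirrors the printer behavior described in the text.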

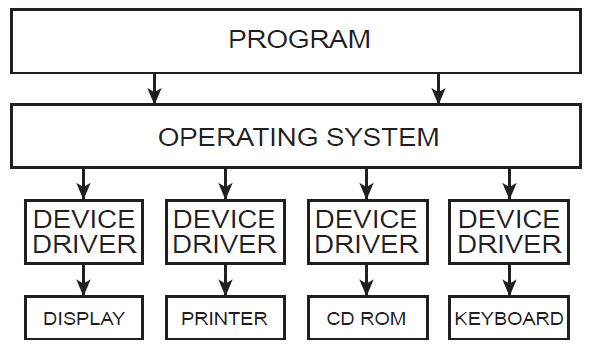

Device drivers are an irreplaceable and critical link between the operating system and the I/O device (see Figure 1-1). They can interact directly with the Central Processing Unit (CPU) and operating system, and in some cases, can allow or block the execution of programs. Device drivers usually operate at the most trusted level of system integrity, so the device driver writer must test the driver code thoroughly to ensure bug-free operation. Failures at the device driver level can be fatal, causing the system to crash or experience a complete loss of data.

The use of computers for graphics processing has become widespread. It would be impossible to support the many types of graphics devices without device drivers. Today’s hardware offers dozens of different resolutions and sizes. For instance, color graphics terminals can be had in pixel sizes of 800 by 600, 1024 by 768, 1280 by 1024, and as high as 2048 by 2048. Each resolution can support a different number of bits per pixel, or color depth.

Printers vary in dots per inch (DPI), font selection, and interface type. Since all of these formats and configurations are in use, the supplier of a graphics design package that needs to send data to a printer would have to support all of the configurations in order to offer a marketable software package. A graphics application program might direct the output device to print a line of text in Helvetica bold italic beginning at column 3, line 2. Each graphics output device, however, might use a different command to print the line at column 3, line 2, so the device driver resolves these types of differences. Instead of having to write, debug, and support all of these special device drivers, the graphics application reads from and writes to these graphics devices using a standard set of APIs, which in turn call the device driver specific to the device currently installed. Without this standardized interface, the software vendor would be forced to supply device drivers for the hundreds of different types of input and output devices. Some word processors, for example, would be forced to supply hundreds of printer device drivers to support all makes and models of printers, from daisy wheel to high-speed laser and color printers.

In summary, the device driver:

- Contains the specific device characteristics and removes any responsibility of the application program for having knowledge of the particular device.

- Allows for device independence by providing for a common program interface, allowing the application program to read from or write to generic devices. It also handles the necessary translation or conversion that may be required by the specific device.

- Serializes access to the device, preventing other programs from corrupting input or output data by attempting to access the device at the same time.

- Protects the operating system and the devices owned by the operating system from errant programs which may try to write to them, causing the system to crash.

OS/2 Overview

OS/2, introduced in late 1987, was originally called MS-DOS 4.0. It was later named MS-DOS 5.0, and finally OS/2. OS/2 was designed to break the MS-DOS 640KB real mode memory barrier by utilizing the protect mode of the 80286 processor. The protect mode provided direct addressing of up to 16 megabytes (MB) of memory and a protected environment where badly written programs could not affect the integrity of other programs or the operating system.

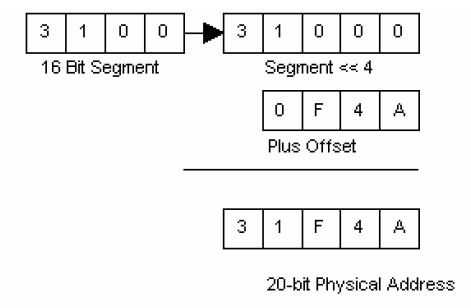

The Intel processors are capable of operating in one of two modes. These are called real mode and protect mode. One of the most popular computer operating systems, MS-DOS, runs in real mode. In real mode, the processor is capable of addressing up to one megabyte of physical memory. This is due to the addressing structure, which allows for a 20-bit address in the form of a segment and offset (see Figure 1-2).
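The real mode address calculation can be written out as a short sketch. The function name is hypothetical; the arithmetic is the standard one, in which the 16-bit segment is multiplied by 16 and the 16-bit offset is added.

```c
#include <stdint.h>

/* Real-mode physical address: shift the segment left four bits
   (multiply by 16) and add the offset. The sum can slightly exceed
   20 bits for segments near 0xFFFF; this sketch does not model how
   a given processor treats that case. */
uint32_t real_mode_address(uint16_t segment, uint16_t offset)
{
    return ((uint32_t)segment << 4) + offset;
}
```

Because segments overlap every 16 bytes, many segment:offset pairs name the same byte. For example, 1234:0010 and 1235:0000 both resolve to physical address 0x12350.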

Real mode allows a program to access any location within the one-megabyte address space. There are no protection mechanisms to prevent programs from accidentally (or purposely) writing into another program’s memory area. There is also no protection from a program writing directly to a device, say the disk, and causing data loss or corruption. MS-DOS applications that fail generally hang the system and call for a <ctrl-alt-del> reboot, or in some cases, a power-off and a power-on reboot (POR). The real mode environment is also ripe for viruses or other types of sabotage programs to run freely. Since no protection mechanisms are in place, these types of “Trojan horses” are free to infect programs and data with ease.

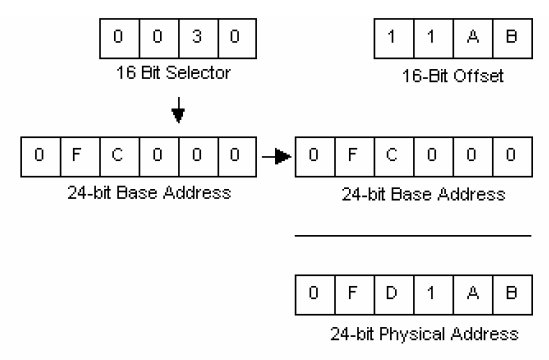

The protect mode of the Intel 80286 processor permits direct addressing of memory up to 16MB, while the Intel 80386 and 80486 processors support the direct addressing of up to four gigabytes (4,294,967,296 bytes). The 80286 processor uses a 16-bit selector and 16-bit offset to address memory (see Figure 1-3). A selector is an index into a table that holds the actual address of the memory location.

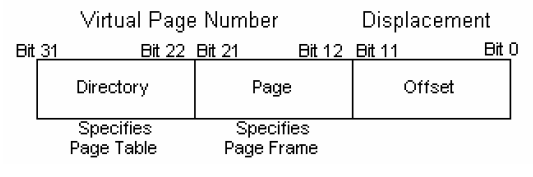

The offset portion is the same as the offset in real mode addressing. This mode of addressing is commonly referred to as 16:16 addressing. Under OS/2, the 80386 and 80486 processors address memory using a linear address. The linear address is a 32-bit flat address consisting of three parts. The first part, which is 10 bits long, is an index into the page directory and selects a page table. The second part, which is also 10 bits long, is an index into that page table and selects a page table entry (PTE), which identifies a particular page frame. The third part, the remaining 12 bits, is an offset into the page frame. The page information is decoded by the paging hardware located on the processor. The physical address is formed by locating the PTE, reading the address of the page frame from it, and adding in the offset portion of the address. This mode of addressing is referred to as 0:32 or flat addressing (see Figure 1-4).
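A minimal sketch of how a 32-bit linear address divides into its three parts follows. The field names use Intel's terminology for 4KB paging (page directory index, page table index, offset); the structure and function names are hypothetical. The offset width follows from the arithmetic 32 - 10 - 10 = 12 bits.

```c
#include <stdint.h>

typedef struct {
    uint32_t directory_index;  /* bits 31..22: first 10-bit part */
    uint32_t table_index;      /* bits 21..12: second 10-bit part */
    uint32_t offset;           /* bits 11..0: offset into the page frame */
} linear_parts;

/* Split a linear address into the fields the paging hardware decodes. */
linear_parts split_linear(uint32_t linear)
{
    linear_parts p;
    p.directory_index = (linear >> 22) & 0x3FFu;
    p.table_index     = (linear >> 12) & 0x3FFu;
    p.offset          = linear & 0xFFFu;
    return p;
}
```

For the address 0x00403004, the three fields come out as 1, 3, and 4 respectively.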

The protect mode provides for hardware memory protection, prohibiting a program from accessing memory owned by another program. While a defective program in real mode can bring down the entire system (a problem frequently encountered by systems running MS-DOS), a protect mode program that fails in a multitasking operating system merely reports the error and is terminated. Other programs running at the time continue to run uninterrupted.

To accomplish this memory protection, the processor keeps a list of memory belonging to a program in the program’s Local Descriptor Table, or LDT. When a program attempts to access a memory address, the processor hardware verifies that the address of the memory is within the memory bounds defined by the program’s LDT. If it is not, the processor generates an exception and the program is terminated. The processor also keeps a second list of memory called the Global Descriptor Table, or GDT. The GDT usually contains a list of the memory owned by the operating system, and is only accessible by the operating system and device drivers. Application programs have no direct access to the GDT except through a device driver.
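The bounds check described above can be approximated in software as follows. This is a deliberately simplified model with hypothetical names: it ignores privilege levels, access rights, and the choice between the LDT and GDT, and it keeps only the base, limit, and a validity flag for each descriptor.

```c
#include <stdint.h>
#include <stddef.h>

typedef struct {
    uint32_t base;     /* starting address of the segment */
    uint32_t limit;    /* highest valid offset within the segment */
    int      present;  /* nonzero if the entry describes valid memory */
} descriptor;

/* Returns 1 and stores the translated address if the access is allowed.
   Returns 0 where real hardware would raise a protection exception. */
int check_access(const descriptor *table, size_t entries,
                 uint16_t selector, uint32_t offset, uint32_t *address)
{
    size_t index = (size_t)selector >> 3;   /* bits 15..3 select the entry */

    if (index >= entries || !table[index].present)
        return 0;                            /* no such segment */
    if (offset > table[index].limit)
        return 0;                            /* outside the segment bounds */
    *address = table[index].base + offset;
    return 1;
}
```

The point of the sketch is that the check happens on every access and is driven entirely by the table contents, so a program can only reach memory that the operating system has placed in its table.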

OS/2 was designed with several goals in mind. First, OS/2 had to provide a graphical user interface that was consistent across applications and graphics hardware.

Second, OS/2 had to support Dynamic Linking. With Dynamic Linking, some functions required by an application can reside in a separate file, which is loaded only at run time. This feature makes application file sizes smaller, allows more than one client to use the Dynamic Link Library (DLL), and allows functionality to be placed in the DLL without changing the application code base. The majority of OS/2 is implemented in DLLs.

Third, OS/2 had to provide an efficient, preemptive multitasking kernel. The kernel had to run several programs at once, yet provide an environment where critical programs could get access to the CPU when necessary. OS/2 uses a priority-based preemptive scheduler. The preemptive nature of the OS/2 scheduler allows it to “take away” the CPU from a currently running application and assign it to another application.

OS/2’s smallest granularity of execution is the thread, which is an instance of execution. Processes consist of one or more threads, and each thread can execute at its own priority. OS/2 has four priority classes with 32 levels within each priority class. Threads with higher priority can interrupt the execution of lower priority threads.
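One way to picture the two-level priority scheme is a comparison that looks at the class first and the level second. The sketch below is an assumption-laden illustration: the numeric class ranks and the names are hypothetical, and it does not reproduce the actual OS/2 scheduler or its priority constants.

```c
/* Hypothetical representation of a thread priority. */
typedef struct {
    int class_rank;  /* 0 (lowest) through 3 (highest): one of four classes */
    int level;       /* 0 through 31 within the class */
} thread_priority;

/* Returns nonzero if a thread with priority a should preempt one with
   priority b. The class decides first; the level only breaks ties
   within the same class. */
int preempts(thread_priority a, thread_priority b)
{
    if (a.class_rank != b.class_rank)
        return a.class_rank > b.class_rank;
    return a.level > b.level;
}
```

Under this model, a thread at the lowest level of a higher class still outranks a thread at the highest level of a lower class.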

Fourth, OS/2 had to provide a robust, protected environment with virtual memory. OS/2 uses the protect-mode of the 80286 and above processors, which has built-in hardware memory protection. Applications that attempt to read or to write from memory that is not in their specific address space are terminated without compromising the operating system integrity. OS/2 uses an efficient memory allocation and paging scheme consisting of a combination of first-fit, Least Recently Used (LRU), and compaction to minimize fragmentation.

Fifth, OS/2 had to support the older 16-bit protect-mode applications that used the segment:offset addressing scheme as well as new, 32-bit flat model executables. OS/2 offers a rich set of Application Program Interfaces (APIs) to allow programs to access system services. The OS/2 APIs are classified into eight major categories: file systems, graphics interface, inter-process communications, systems services, process/thread management, memory management, signals, and dynamic linking.

Finally, OS/2 had to run most MS-DOS programs in an MS-DOS-compatibility mode. OS/2 allows MS-DOS programs to run in their own one megabyte of virtual memory space, providing protection from other MS-DOS or OS/2 programs.

At the time OS/2 was written, the most powerful mainstream processor available for the PC market was the Intel 80286. At that time, memory was still quite expensive, and most PC systems were shipped with a maximum of 4MB of memory installed. Accordingly, the operating system and support code were written using 16-bit tools that produced smaller and faster code. Because of space and speed considerations, a majority of the OS/2 kernel and supporting DLLs were written in assembly language.

After Microsoft and IBM split on the direction for OS/2, IBM embarked on a project to convert 16-bit OS/2 into a 32-bit operating system. This was now possible for two reasons. First, the mainstream processor shipped with Intel-based PCs was the 80386, a full 32-bit processor. Second, the price of memory had dropped dramatically, and most systems were now shipped with up to 8MB of memory. (Converting any 16-bit program to 32 bits always caused an increase in size and execution time. Contrary to popular belief, few, if any, 32-bit programs run as fast as their 16-bit counterparts.)

During this conversion, IBM elected to make a radical change in the user interface rather than to rewrite the OS/2 kernel. This was done for two reasons. The first was that, like most companies, IBM had limited programming resources that could be brought to bear on the rewrite. The second reason was that OS/2 device drivers were difficult to write, and IBM wanted to retain support for any existing 16-bit device drivers that had already been written. All of the system’s internal data structures were byte or word aligned; the scheduling, dispatching, and virtual memory data structures were all written in assembly language and accessed via 16-bit selector:offset addresses. The file system, the heart of the device driver interface, was also written entirely in 16-bit code. IBM made the decision to work on the area of OS/2 that would make the most visual impact while leaving all of the internal plumbing untouched. This turned out to be a serious long-term error in judgement.

For tools, IBM used the Microsoft 16-bit assembler and Microsoft 16-bit C compiler. For the few 32-bit components that were part of OS/2, IBM used an unreleased 32-bit version of Microsoft’s C compiler. Worse, some components would compile and link only with certain versions of the assembler or compiler. Microsoft, seizing a golden opportunity, discontinued the availability of the 16-bit tools and never released the 32-bit version of its C compiler. This brought mainstream device driver development to a grinding halt, relegating it instead to a few independent driver development shops that still had a license to the old tools. Today, almost seven years later, OS/2 still suffers from those fateful decisions to leave the file system and device driver interface untouched.

The Proposal

This proposal suggests a new flat-model base device driver model written in C. Appendix A also suggests a new base driver model written in C++ utilizing Object Oriented (OO) design techniques. (We have not included Presentation Device Drivers, that is, drivers for printers, displays, and other bit-mapped devices, in this discussion. Under the current architecture, there is nothing to prevent these drivers, which are essentially DLLs, from being written in 32-bit code. They should, however, be able to be dynamically loaded and configured in the same way as base device drivers.) OO purists may find some of the C++ code and techniques objectionable because they don’t adhere to a strict OO paradigm. However, it is our observation that interacting directly with the hardware requires a somewhat procedural approach, even if an object-oriented language is used. Using C++ presents some possible problems, such as the timely collection of dead objects from the system heap. In OS/2, the system heap is swappable, which may lead to several page faults when destructors are called or during system heap compaction.

We have chosen C because of its universal acceptance and its ability to easily perform low-level functions. In Appendix A, we present a sample driver written in C++. Although some C++ programmers may find this model more “comfortable”, we see no advantage in using C++ over conventional C. The allocation of objects in a device driver is usually done at load time, and the resources remain locked while the device driver is loaded in memory. If, for example, the driver needed to access a buffer at interrupt time, it would not be possible to allocate the buffer or swap it in off the disk in time to service the interrupt, so the buffer must remain in memory. Also, C++ calls object destructors automatically, which might result in an object going out of scope at just the wrong time. This could cause intermittent or fatal errors, and would be difficult to track down. A feature of C++ is the ability to return to the heap the space that was allocated by objects that have since gone out of scope. The ability to perform timely garbage collection and heap compaction can be critical for the proper operation of a C++ device driver. Because there are some issues related to C++ that we are not sure can be easily solved, we decided to use C for the purposes of this design.

Our proposal intends to solve the following problems:

- Currently, device drivers are loaded in the order that they appear in the CONFIG.SYS file. This can cause conflicts in certain hardware configurations where port or memory addresses may overlap.

- In the current implementation of OS/2, no file I/O services are available from within the driver, although a limited number of file system APIs are available during driver initialization. There is no method of logging activity or events during driver execution.

- There are no profiling services available at the driver level.

- There are virtually no supported 16-bit compilers and assemblers with which to build new device drivers.

- Device drivers are currently unable to allocate more than 64KB of contiguous physical memory.

- To use DMA, the driver must write directly into the 8237 DMA controller registers, perform several memory allocations until a memory object is allocated on the correct segment boundary and paragraph alignment, and double-copy data if the application buffer is above the 16MB boundary. (For this functionality, we propose to provide a set of driver-callable DMA utility functions that will perform these operations transparently.)

- Device drivers are loaded statically by references in the CONFIG.SYS file. The drivers must be able to be loaded dynamically or “as needed”.

- Drivers are loaded in low memory, below the 640KB boundary, limiting the size and number of device drivers that can be loaded at boot time.
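To make the DMA item in the list above more concrete, the following sketch shows the kind of check a DMA utility function might perform before handing a buffer to the 8237 controller. The function name and constants are hypothetical. The sketch assumes an 8-bit DMA channel, where a transfer must lie below the 16MB boundary and must not cross a 64KB physical boundary; it is not the proposed utility interface itself.

```c
#include <stdint.h>

#define DMA_ADDRESS_LIMIT 0x01000000u  /* 16MB: highest address the controller reaches */
#define DMA_PAGE_SIZE     0x00010000u  /* 64KB: a transfer may not cross this boundary */

/* Returns 1 if the physical range [phys, phys + length) can be given to
   the controller directly, or 0 if the driver would have to allocate a
   suitable intermediate buffer and copy the data. */
int dma_buffer_usable(uint32_t phys, uint32_t length)
{
    uint32_t last;

    if (length == 0 || length > DMA_PAGE_SIZE)
        return 0;
    if (phys >= DMA_ADDRESS_LIMIT || length > DMA_ADDRESS_LIMIT - phys)
        return 0;                       /* starts or ends above 16MB */
    last = phys + length - 1;
    return (phys / DMA_PAGE_SIZE) == (last / DMA_PAGE_SIZE);
}
```

When the check fails, the driver falls back to the double-copy path described in the list: it transfers through a buffer that does satisfy the constraints and copies the data to or from the application buffer.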

To satisfy these requirements, we have made the following assumptions:

- The file system will be rewritten to handle the proper device driver calls. This might include a 32-bit to 16-bit thunk layer or memory aliasing to provide a seamless interface to the 32-bit device driver. In other words, the 32-bit device driver should be able to be installed, configured, run, and deinstalled without being aware of whether the underlying architecture is 16-bit or 32-bit.

- The file system will be modified to accept long device names. Currently, OS/2 supports only 8-character device names, a relic from the old 8.3 file naming conventions.

- The system will be modified to support a persistent data store or registry for supported devices, either installed or uninstalled.

- The system will correctly handle mapping or aliasing pointers passed by 16-bit applications inside the request packet. It is the programmer’s responsibility to handle any embedded pointers in private data buffers shared between the application and the device driver.

- The system will be modified to add the DeInstall command, which removes the driver from the system and frees any resources owned by the driver. (This may prove to be very difficult, as the driver may be hung in a state that cannot be modified. In this case, rebooting may be necessary anyway.)

- OS/2 will provide a configuration utility to examine and modify the persistent configuration store or registry to permit legacy device settings to be stored.

- OS/2 will be modified to allow access to the least significant bits of the 8254 timer to provide a reasonable granularity for the driver profiling services.

- The OS/2 system loader will be modified to load the new device driver model. This loader should operate both at boot time and later when a device driver is dynamically loaded. We think this effort will be a major task.

- Wherever possible, the new driver model shall incorporate code, functions, subroutines, and macros from the existing 16-bit device driver model to ease the conversion of older drivers. The conversion of older drivers should be easy and straightforward, and if possible, should follow the architecture of the existing 16-bit model. (In some cases, this may not be possible, although a large portion of the actual source code may be easily transportable to the new architecture.)
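The timer assumption in the list above can be illustrated with a small sketch of how profiling code might turn two counter readings into elapsed time. It assumes a free-running 16-bit down-counter clocked at the nominal PC timer frequency of 1,193,182 Hz and at most one wrap between the two readings. The function names are hypothetical and the way the counter is actually read is left out.

```c
#include <stdint.h>

#define PIT_HZ 1193182u  /* nominal 8254 input clock on PC-compatible systems */

/* Ticks elapsed between two readings of a 16-bit down-counter.
   Truncating to 16 bits gives modulo-65536 arithmetic, which
   accounts for a single wrap from 0 back to 0xFFFF. */
uint16_t pit_elapsed_ticks(uint16_t start, uint16_t end)
{
    return (uint16_t)(start - end);
}

/* Convert a tick count to nanoseconds, rounding down. */
uint32_t pit_ticks_to_ns(uint16_t ticks)
{
    return (uint32_t)(((uint64_t)ticks * 1000000000u) / PIT_HZ);
}
```

One tick corresponds to roughly 838 nanoseconds, which is the granularity a profiling service built this way could offer.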