Guide to Multitasking Operating Systems: Overview of the OS/2 Operating System

This section applies the concepts introduced in the previous section, Fundamentals of Operating Systems, to the OS/2 operating system and covers them in depth.

Introduction to OS/2

OS/2 Process Management

This topic deals with how OS/2 multitasks, or runs many threads of execution at the same time.

Introduction to OS/2 Process Management

In any multitasking operating system, the hardware is managed as a set of shared resources that are distributed among concurrently executing entities. The architecture that describes how these concurrent entities are created, terminated, and managed is called the tasking, or multitasking, model. In OS/2, the multitasking model describes how resources such as the processor, memory, files, devices, and interprocess communication structures are shared.

Perhaps the most important resource shared in a multitasking environment is the processor. The operating system shares the processor among concurrently executing entities using a technique known as time-slicing. The operating system switches between programs and lets each one run for a short period of time called a time-slice or quantum. In this way, the processor is shared among all the programs.

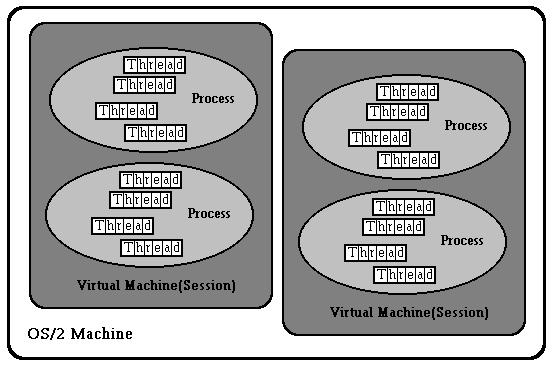

The OS/2 multitasking model consists of a hierarchy of multitasking objects called sessions, processes, and threads. The session is at the top of the hierarchy, and the thread is at the bottom. The session is the unit of user I/O device sharing. Processes are analogous to programs and are the unit of sharing for resources such as memory, files, semaphores, queues, and threads. A thread is the basic unit of execution, and a single process may have multiple threads that execute concurrently within it.

OS/2 Sessions

Each session contains a logical video buffer, a logical keyboard, a logical mouse, and one or more processes that are said to be running in, or attached to, the session. The logical devices are per-session representations of the physical devices, and processes running in the session perform their user I/O through the session's logical devices. Only one session at a time has its logical devices mapped onto the physical devices. This session is called the foreground session, and the other sessions are in the background. Users change the foreground session by switching between sessions with the keyboard or the mouse. Sessions thus provide the infrastructure for program management and user I/O device sharing.

You can think of a session as a virtual personal computer provided to a group of processes. For each session, OS/2 maintains three things:

- a logical video buffer, which is a shadow of the screen contents;
- a keyboard input queue;
- a mouse event queue.

Processes running in a session therefore see a dedicated keyboard, video buffer, and mouse, to which they can make API calls at any time.

OS/2 Processes

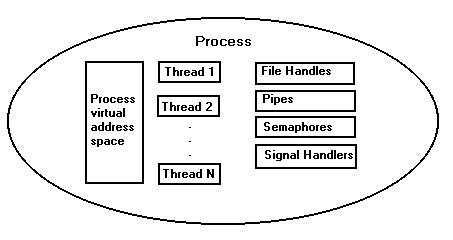

A process is the basic unit of programming and resource sharing in OS/2. A process corresponds to a program and is created when a program is loaded. It is the central abstraction for the sharing of resources such as processors, memory, files, and interprocess communication data structures. The kernel assigns each process a unique process identifier (PID). OS/2 1.X supports up to 255 processes; OS/2 2.0 supports up to 4095 processes.

The system maintains many resources on a per-process basis. The primary resources contained in a process are its memory domain and its threads of execution. A thread is an instance of execution of a sequence of instructions. Every process is created with one thread and can create more. The threads within a process all share the process's resources and have access to one another. Although memory and threads are the main features of a process, the system also tracks many other resources per process. These include signal handlers, open files, and interprocess communication features such as semaphores, queues, and pipes.

OS/2 Threads

Threads are the dispatchable units within an OS/2 process. In other words, processes do not actually run; threads do. A thread is an execution instance of a piece of code within a process, and every process in the system has at least one thread. From the user's perspective, a thread's context consists of a register set, a stack, and an execution priority.

Threads share all the resources owned by the process that creates them. All the threads within a process share the same virtual address space, open file handles, semaphores, and queues. Each thread is in one of three states: running, ready to run, or blocked. On uniprocessor hardware platforms, only a single thread in the system is actually in the running state. The running thread is the ready-to-run thread that is currently selected to run according to the OS/2 priority scheme. Threads in the blocked state are waiting for an event to complete.
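The three thread states and the legal moves between them can be captured as a small state machine. The following is a minimal C sketch. The event names (`EV_DISPATCH`, `EV_PREEMPT`, `EV_BLOCK`, `EV_WAKE`) and the function `thread_transition` are hypothetical and exist only for illustration; this is not OS/2 kernel code. One point follows directly from the text: a blocked thread cannot go straight to running. It must first become ready to run, and only then can the scheduler select it.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* The three thread states described in the text. */
typedef enum { THREAD_READY, THREAD_RUNNING, THREAD_BLOCKED } thread_state;

/* Hypothetical events that move a thread between states. */
typedef enum { EV_DISPATCH, EV_PREEMPT, EV_BLOCK, EV_WAKE } thread_event;

/* Apply `ev` to *s. Returns true and updates *s if the transition is legal,
   false (leaving *s unchanged) otherwise. */
bool thread_transition(thread_state *s, thread_event ev)
{
    assert(s != NULL);
    switch (ev) {
    case EV_DISPATCH: /* scheduler selects a ready-to-run thread */
        if (*s != THREAD_READY) return false;
        *s = THREAD_RUNNING; return true;
    case EV_PREEMPT:  /* timeslice expires or a higher-priority thread is ready */
        if (*s != THREAD_RUNNING) return false;
        *s = THREAD_READY; return true;
    case EV_BLOCK:    /* running thread waits for an event, e.g. I/O completion */
        if (*s != THREAD_RUNNING) return false;
        *s = THREAD_BLOCKED; return true;
    case EV_WAKE:     /* the awaited event completes */
        if (*s != THREAD_BLOCKED) return false;
        *s = THREAD_READY; return true;
    }
    return false;
}
```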

When OS/2 switches between threads, it automatically saves the context of the current running thread and restores the context of another thread that is ready to run. This is called a context switch.

There are several advantages to a multi-thread process model over the traditional single-thread process model found in many systems such as UNIX. Since threads share the process's resources, thread creation is far less expensive than process creation, and threads within a process enjoy a tightly coupled environment. When a thread is created, the system doesn't have to create a new virtual address space or load a program file, resulting in an inexpensive concurrent execution path.

OS/2 Scheduling

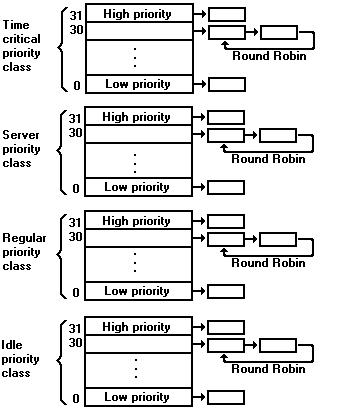

All threads in the system compete for processor time. To determine which threads should run, OS/2 implements a multilevel priority architecture with dynamic priority variation and round robin scheduling within a priority level. Each thread has its own execution priority, and high-priority threads that are ready to run are dispatched before low-priority threads that are ready to run.

There are four priority classes in the OS/2 system: time critical, server, regular, and idle. The server class is also called the fixed-high priority class. Each priority class is further divided into 32 priority levels, numbered 0 through 31.
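A class plus a level can be collapsed into a single number that orders all threads. Every thread in a higher class then outranks every thread in a lower class, whatever its level. The following is a minimal C sketch of that ordering. It assumes 32 levels (0 through 31) per class and that a larger level means higher priority. The enum values are chosen only to express the ordering; they are not OS/2's own API constants. The helper `effective_priority` is hypothetical.

```c
#include <assert.h>

/* Priority classes ordered from lowest to highest. These values are for
   ordering only; they are not the constants used by the OS/2 API. */
typedef enum { CLASS_IDLE, CLASS_REGULAR, CLASS_SERVER, CLASS_TIME_CRITICAL } prio_class;

#define LEVELS_PER_CLASS 32 /* assumption: levels 0..31 within each class */

/* Map (class, level) to one comparable number. Because the class is the
   high-order part, class always dominates level. */
int effective_priority(prio_class c, int level)
{
    assert(level >= 0 && level < LEVELS_PER_CLASS);
    return (int)c * LEVELS_PER_CLASS + level;
}
```

For example, a server-class thread at level 0 still outranks a regular-class thread at level 31.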

Threads in the highest, or time-critical, priority class have timing constraints. An example of a time-critical thread is one that waits for data from a device driver monitoring a high-speed communications device. The system guarantees a maximum interrupt-disable time of 400 microseconds. It also guarantees that time-critical threads are dispatched within 6 milliseconds of becoming ready to run. These timing criteria ensure that the system can respond rapidly to the needs of time-critical threads. They also leave the system flexible enough to let a user switch between programs quickly. Most threads in the system, however, are in the regular priority class.

The server priority class is used for programs running in a server environment that need to execute before regular-class programs on the server. It ensures that client programs relying on the server do not suffer performance degradation because a regular-class program is running locally on the server itself.

Threads in the idle priority class run only when there is nothing to run in the time-critical, server, or regular priority classes. Typically, idle-class threads are daemon threads that run in the background. A daemon thread is one that intermittently wakes up to perform some chores and then goes back to being blocked.

Scheduling is round-robin within a priority level. For example, if five threads have the same priority, the system runs each of the five in turn and gives each one a timeslice. Timeslicing is driven by the system clock. The user can configure timeslices from 32 to 248 milliseconds with the TIMESLICE statement in the CONFIG.SYS file. A thread runs for its entire timeslice unless one of two things happens:

- It calls the kernel and blocks.
- An interrupt makes a higher-priority thread ready to run. In that case, the running thread is preempted.
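Choosing the next thread combines the two rules above: take the highest-priority ready thread, and rotate among threads that share that priority. The following is a minimal C sketch of just that selection step. The `thread_info` structure and `pick_next` function are hypothetical. It deliberately leaves out timeslice accounting, preemption, and OS/2's dynamic priority variation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    int  priority; /* larger value = higher priority */
    bool ready;    /* true if the thread is ready to run */
} thread_info;

/* Choose the next thread to dispatch: the highest-priority ready thread,
   taking turns among equal-priority threads by searching circularly from
   the slot after `last` (the previously dispatched thread, or -1).
   Returns -1 if no thread is ready. */
int pick_next(const thread_info *t, size_t n, int last)
{
    bool found = false;
    int best = 0;
    assert(t != NULL && n > 0 && last >= -1 && last < (int)n);

    for (size_t i = 0; i < n; i++)          /* pass 1: best ready priority */
        if (t[i].ready && (!found || t[i].priority > best)) {
            best = t[i].priority;
            found = true;
        }
    if (!found)
        return -1;

    size_t start = (size_t)(last + 1) % n;  /* pass 2: round robin */
    for (size_t k = 0; k < n; k++) {
        size_t i = (start + k) % n;
        if (t[i].ready && t[i].priority == best)
            return (int)i;
    }
    return -1; /* not reached: pass 1 found a ready thread */
}
```

Calling `pick_next` repeatedly, each time passing the previous result as `last`, cycles through all ready threads of the top priority. A lower-priority thread is picked only when no higher-priority thread is ready.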

OS/2 Process Coordination

Interprocess communication is a corollary of multitasking. Now that PCs can run more than one program at the same time, those programs will most likely need to exchange information and commands. OS/2 provides facilities that let separate programs, each running in its own address space, communicate through system-supported interprocess communication protocols (IPCs). Some IPCs can operate across machine boundaries and are key to the development of LAN-based client-server applications. OS/2 provides a rich set of IPCs, including Anonymous Pipes, Named Pipes, Queues, and Shared Memory.

An Anonymous Pipe is a fixed-length circular buffer in memory. It can be accessed like a file of serial characters through a write handle and a read handle; a handle is the identifier used to refer to the open pipe, as with a file. Anonymous pipes are used mostly by parent processes to communicate with their descendants, which receive the pipe handles through inheritance.
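The "fixed-length circular buffer" behind an anonymous pipe can be illustrated with a small ring buffer. The following C sketch keeps a read position and a byte count, and wraps writes and reads around the end of the array. `ring_pipe`, `pipe_write`, `pipe_read`, and the 8-byte capacity are all hypothetical. The sketch does not use the OS/2 pipe API. It also returns a short count when the buffer is full or empty, where a real pipe would block the caller.

```c
#include <assert.h>
#include <stddef.h>

#define PIPE_SIZE 8 /* hypothetical fixed capacity in bytes */

typedef struct {
    unsigned char buf[PIPE_SIZE];
    size_t head;  /* index of the next byte to read */
    size_t count; /* number of bytes currently stored */
} ring_pipe;

/* Append up to len bytes; returns how many fit before the buffer filled. */
size_t pipe_write(ring_pipe *p, const void *data, size_t len)
{
    const unsigned char *src = data;
    size_t written = 0;
    assert(p != NULL && (data != NULL || len == 0));
    while (written < len && p->count < PIPE_SIZE) {
        p->buf[(p->head + p->count) % PIPE_SIZE] = src[written++]; /* wrap */
        p->count++;
    }
    return written;
}

/* Remove up to len bytes in FIFO order; returns how many were available. */
size_t pipe_read(ring_pipe *p, void *out, size_t len)
{
    unsigned char *dst = out;
    size_t nread = 0;
    assert(p != NULL && (out != NULL || len == 0));
    while (nread < len && p->count > 0) {
        dst[nread++] = p->buf[p->head];
        p->head = (p->head + 1) % PIPE_SIZE; /* wrap */
        p->count--;
    }
    return nread;
}
```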

Named Pipes provide two-way communication among unrelated processes, either locally or remotely. A server process creates the pipe and waits for clients to access it. Clients use the standard OS/2 file services to gain access to the named pipe. Multiple clients can be serviced concurrently over the same pipe.

A Queue allows byte-stream packets written by multiple processes to be read by a single process. The exchange does not have to be synchronized. The receiving process can order access to the packets, or messages, in one of three modes: first-in/first-out (FIFO), last-in/first-out (LIFO), or priority. Items can be retrieved from the queue either sequentially or by random access. OS/2 queues contain pointers to messages rather than copies of the data itself.

Shared Memory is another interprocess communication protocol. OS/2 provides facilities for creating named shared segments. Any process that knows the name of a shared memory object has automatic access to it. Processes must coordinate their access to shared memory through the use of synchronization techniques.
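The three queue orderings, and the fact that queue elements hold pointers rather than copies of messages, can be shown in a short C sketch. `msg_queue`, `queue_write`, `queue_read`, and the 16-element capacity are hypothetical; the sketch does not use the OS/2 queue API. It also assumes that a larger priority value is read first, with ties going to the oldest element.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { QUEUE_FIFO, QUEUE_LIFO, QUEUE_PRIORITY } queue_order;

typedef struct {
    const void *data;  /* pointer to the message, not a copy of it */
    int priority;      /* consulted only in QUEUE_PRIORITY mode */
} queue_element;

#define QUEUE_CAP 16 /* hypothetical fixed capacity */

typedef struct {
    queue_order order;
    queue_element items[QUEUE_CAP];
    size_t count;      /* items[0] is always the oldest element */
} msg_queue;

/* Any number of writers may append; returns -1 if the queue is full. */
int queue_write(msg_queue *q, const void *data, int priority)
{
    assert(q != NULL);
    if (q->count == QUEUE_CAP) return -1;
    q->items[q->count].data = data;
    q->items[q->count].priority = priority;
    q->count++;
    return 0;
}

/* The single reader removes the next element per the queue's ordering;
   returns -1 if the queue is empty. */
int queue_read(msg_queue *q, queue_element *out)
{
    size_t pick = 0;                              /* FIFO: oldest element */
    assert(q != NULL && out != NULL);
    if (q->count == 0) return -1;
    if (q->order == QUEUE_LIFO) {
        pick = q->count - 1;                      /* newest element */
    } else if (q->order == QUEUE_PRIORITY) {
        for (size_t i = 1; i < q->count; i++)     /* oldest wins ties */
            if (q->items[i].priority > q->items[pick].priority) pick = i;
    }
    *out = q->items[pick];
    for (size_t i = pick + 1; i < q->count; i++)  /* close the gap */
        q->items[i - 1] = q->items[i];
    q->count--;
    return 0;
}
```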

Interprocess Synchronization

Interprocess synchronization is another corollary of multitasking. It consists of the mechanisms that keep concurrent processes or threads from interfering with one another when they access shared resources. The idea is to serialize access to a shared resource by using a protocol that all parties agree to follow. OS/2, like most operating systems, provides an atomic service called the semaphore, which applications may use to synchronize their actions.
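The key property of a semaphore is that "test whether free, and take it if so" happens as one indivisible step. The following C sketch shows that idea using the standard C11 `atomic_flag` test-and-set. The type `mutex_sem` and the `msem_*` functions are hypothetical; this is not the OS/2 semaphore API. The sketch also busy-waits, whereas an operating-system semaphore blocks the waiting thread so that it uses no processor time.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct { atomic_flag locked; } mutex_sem;

void msem_init(mutex_sem *s)
{
    assert(s != NULL);
    atomic_flag_clear(&s->locked);     /* start out unowned */
}

/* One atomic test-and-set: returns true if the caller now owns the semaphore. */
bool msem_try_request(mutex_sem *s)
{
    return !atomic_flag_test_and_set(&s->locked);
}

/* Spin until ownership is obtained (a real OS semaphore would block instead). */
void msem_request(mutex_sem *s)
{
    while (!msem_try_request(s))
        ;
}

void msem_release(mutex_sem *s)
{
    atomic_flag_clear(&s->locked);
}
```

Each party follows the agreed protocol: call `msem_request` before touching the shared resource and `msem_release` afterwards. This serializes access, because only one caller at a time can get past the request step.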

OS/2 Memory Management

This topic deals with how OS/2 manages memory.

Introduction to OS/2 Memory Management

Memory management is the way in which an operating system allows applications to access the system's memory, either for private use by a single application or to be shared between applications. In either case, it is the responsibility of the operating system's memory management component to supervise the correct use of memory and to ensure that no application gains access to memory outside its own address space. It also includes the way in which memory is allocated. The operating system must check how much memory is available to applications, and handle the situation when there is no longer any real memory left to satisfy an application's requests.

Previous versions of OS/2 were based on the Intel 80286 processor architecture. This architecture limits the amount of memory that can be addressed as a single unit, because memory is managed in segments of at most 64KB. Previous versions maintained a series of descriptor tables for memory segments, and each table entry allocated 16 bits for the length of the segment. Each descriptor could therefore describe a segment of at most 2^16 bytes = 64KB. When a single memory object or data structure needed more than 64KB, both the programmer and the operating system had to take this limitation into account. They had to use multiple memory segments for a single logical structure.
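The extra bookkeeping that the 64KB limit imposed can be sketched in C: a large logical object spread over consecutive 64KB segments needs a segment number and an offset for every byte position. The following is a minimal sketch. `locate` and `segments_needed` are hypothetical helpers. A real 16-bit program would also have to load the corresponding segment selector, which this sketch does not model.

```c
#include <assert.h>
#include <stdint.h>

#define SEGMENT_SIZE 0x10000UL /* 2^16 bytes = 64KB, the 80286 segment limit */

typedef struct {
    uint32_t segment_index;     /* which 64KB segment holds the byte */
    uint16_t offset_in_segment; /* 16-bit offset within that segment */
} seg_location;

/* Locate byte `offset` of a large object spread over consecutive segments. */
seg_location locate(uint32_t offset)
{
    seg_location loc;
    loc.segment_index = (uint32_t)(offset / SEGMENT_SIZE);
    loc.offset_in_segment = (uint16_t)(offset % SEGMENT_SIZE);
    return loc;
}

/* Number of 64KB segments needed for an object of `size` bytes. */
uint32_t segments_needed(uint32_t size)
{
    assert(size > 0);
    return (uint32_t)((size - 1) / SEGMENT_SIZE + 1); /* round up, no overflow */
}
```

For instance, a 200,000-byte structure needs four segments, and byte 200,000 of a larger one lives at offset 3392 of segment 3.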

OS/2 2.0, however, is based upon the Intel 80386 processor architecture. This processor has a 32-bit addressing scheme in place of the 24-bit overlapped scheme used in the 80286, thereby giving access to 2^32 = 4GB of memory in a single logical unit.

In the 80386 architecture, memory is divided into fixed-size units of 4KB called pages, and all memory allocation, addressing, swapping, and protection is based on pages. As with previous versions of OS/2, the total memory allocated to all processes running in the system may exceed the physical memory available. Memory objects, or parts of them, that the currently executing process does not need may be temporarily migrated out to secondary storage (disk). With a paged memory management scheme, this procedure is known as paging. When an application requests a large amount of memory, multiple pages are allocated. Because virtual memory is managed page by page, such an allocation may exist partly in real memory and partly in a file on disk. This significantly eases the constraints on memory overcommitment.

OS/2 1.3 moved complete segments between main memory and the swap file. Because segments were variable in length, managing both main memory and space in the swap file was complicated. Regular memory compaction was also required to reclaim the gaps that formed when memory was freed. Under OS/2 2.0, there is in most cases no need to find contiguous pages in memory to satisfy an allocation request, so there is no need to move pages around in memory. The exception is buffers used for DMA I/O transfers, which must occupy contiguous locations in physical memory.

Flat Memory Model

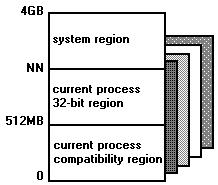

The memory model used by OS/2 2.0 is known as the flat memory model. The term reflects the fact that memory is regarded as a single large linear address space of 4GB, addressed directly with 32-bit addresses. This view applies to every process.

Like the 80286, the 80386 supports a segmented memory model, except that on the 80386 the maximum segment size is 4GB. The 80386 provides no explicit facility for disabling segmentation. OS/2 2.0 therefore implements the flat memory model by mapping the 4GB address space as a single code segment and a single data segment, each with a base address of zero and a size of 4GB. Only two segment selectors are therefore required in the system:

- an executable/readable code segment in the CS register;
- a read/write data segment in the DS, ES, and SS registers.

These selectors are known as aliases, since they map to the same linear address range.

The 32-bit addressing scheme used by OS/2 2.0 is referred to as 0:32, or "Motorola mode". This distinguishes it from the 16-bit segmented addressing scheme used by previous versions of OS/2, which is referred to as 16:16. The terms reflect how each model refers to a specific memory location. The older segmented model uses a 16-bit segment selector and a 16-bit offset. The flat model needs no segment selector and simply uses a 32-bit offset within the system's linear address space.

The system's global address space is the entire 4GB linear address space. Each process has its own process address space, completely distinct from that of all other processes in the system. All threads within a process share the same process address space. This address space is also theoretically 4GB in size. However, the maximum size for process address spaces is defined at system initialization time and is somewhat less than 4GB, to allow space for memory used by the operating system.

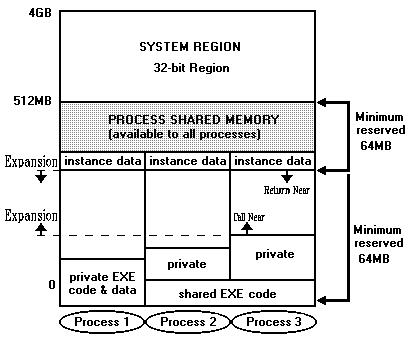

The figure above shows the mapping of a process address space into the system's global address space. NN represents the maximum linear address of the process address space, which is set at initialization time. OS/2 2.0 sets this limit to 512MB and reserves the linear address range above it for operating system use. The space above 512MB is known as the system region.

Note: The 32-bit region within the process address space is not used by OS/2 2.0, since "NN" is set to 512MB.

The operating system uses this limit on the size of the process address space to protect the system region from access by applications.

Conceptually, the process address space is divided into two regions, as shown above. One region, known as the 16/32-bit region or compatibility region, may be accessed by both 16:16 and 0:32 applications. The other region is accessible only to 0:32 applications and is known as the 32-bit region.

The 16:16 addressing scheme allows access to up to 512MB per process. The local descriptor tables used in this model contain up to 8192 entries, each of which can point to a segment of up to 64KB (8192 × 64KB = 512MB). To let 16-bit and 32-bit applications coexist under OS/2 2.0, the maximum size of the process address space has been set at 512MB. As a result, all memory in the process address space can be addressed using either the 16:16 or the 0:32 addressing scheme. This capability is important for three reasons:

- Applications can be composed of mixed 16-bit and 32-bit code.
- 32-bit applications can call 16-bit service layers.
- 16-bit applications written for OS/2 1.X run unmodified.

Together, these effectively create a "hybrid" memory management environment.
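One way to make every address below 512MB reachable from both schemes is "tiling". In this layout, local descriptor table entry n describes the 64KB starting at linear address n × 64KB, so converting between 16:16 and 0:32 becomes pure bit arithmetic. The following C sketch shows such a conversion. It assumes that tiled layout, with selectors built as (index << 3) | TI | RPL, TI = 4 (LDT), and RPL = 3 (application privilege). The functions `flat_to_16_16` and `far16_to_flat` are hypothetical; compilers and the system supply their own thunking helpers.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed tiled layout: LDT entry n covers linear [n * 64KB, (n + 1) * 64KB). */
#define SEL_TI_LDT 0x4u  /* table indicator: selector refers to the LDT */
#define SEL_RPL3   0x3u  /* requested privilege level 3 (applications) */

/* 0:32 -> 16:16, returned packed as (selector << 16) | offset.
   Only addresses below 512MB (8192 entries x 64KB) can be converted. */
uint32_t flat_to_16_16(uint32_t flat)
{
    assert(flat < 0x20000000u);
    uint16_t sel = (uint16_t)(((flat >> 16) << 3) | SEL_TI_LDT | SEL_RPL3);
    uint16_t off = (uint16_t)(flat & 0xFFFFu);
    return ((uint32_t)sel << 16) | off;
}

/* 16:16 -> 0:32: drop the TI/RPL bits to get the LDT index, which is
   also the number of the 64KB tile. */
uint32_t far16_to_flat(uint32_t far16)
{
    uint16_t sel = (uint16_t)(far16 >> 16);
    uint16_t off = (uint16_t)(far16 & 0xFFFFu);
    return ((uint32_t)(sel >> 3) << 16) | off;
}
```

The 512MB ceiling falls out of the arithmetic: the largest LDT index, 8191, shifted left by 3 and combined with the TI and RPL bits gives selector 0xFFFF. No higher tile can be named.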

The 32-bit flat memory model greatly simplifies two kinds of migration, both of which the segmented memory model of the 80286 made difficult:

- migrating 32-bit applications to OS/2 2.0 from other operating system platforms;
- migrating OS/2 2.0 applications to other platforms.

All of these benefits arise from the use of a flat linear memory model that takes advantage of the advanced features of the 80386 processor. The paging scheme is more general than the segmentation scheme used by the 80286. The flat memory model will therefore facilitate any future migration to processors outside the Intel 80x86 family.

Memory Objects

The smallest unit of memory allocation in the flat model is a page (4KB on the 80386), compared to a byte in the 16-bit segmented model. The flat model is therefore page granular. In the flat model, a memory object is not a segment but a range of contiguous linear pages within the process virtual address space. The base address of a memory object is aligned on a page boundary, and its size is a multiple of the page size. Unlike in the segmented system, all memory objects are addressable simultaneously. No segment registers need to be loaded to reach them, which improves performance on an 80386 in protected mode.
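Page granularity means every request is effectively rounded up to whole 4KB pages, and every memory object starts on an address whose low 12 bits are zero. The following C sketch shows the arithmetic. The helpers `round_up_to_pages` and `is_page_aligned` are hypothetical and are not OS/2 functions.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SIZE 4096u /* 4KB pages on the 80386 */

/* Bytes actually set aside for a request: rounded up to whole pages. */
uint32_t round_up_to_pages(uint32_t bytes)
{
    assert(bytes > 0 && bytes <= UINT32_MAX - (PAGE_SIZE - 1));
    return (bytes + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1);
}

/* True if addr lies on a page boundary (its low 12 bits are zero). */
int is_page_aligned(uint32_t addr)
{
    return (addr & (PAGE_SIZE - 1)) == 0;
}
```

Because PAGE_SIZE is a power of two, masking with `~(PAGE_SIZE - 1)` clears the low 12 bits, so a 1-byte request becomes 4096 bytes and a 4097-byte request becomes 8192.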

Virtual Memory Management

The virtual address space is split into two regions:

- System Region: This is the region above the 512MB level, which is accessible only to tasks running at the operating system privilege level.

- Process Region: This is the first 512MB of the virtual address space. Only memory objects in this region are mapped into a process's address space when that process is running at user privilege level. Each process in the system has its own mapping of this region. The process region is further split into:

  - A shared area: This holds shared memory objects such as DLL code and shared data areas.

  - A private area: This contains EXE code and private process data.

In order to manage virtual memory, OS/2 2.0 uses the concept of an arena. There are three types of arenas in the system:

- The system arena

- The shared arena

- Per-process private arenas

Each arena is associated with the virtual address space that it maps. The system arena contains all of the memory objects in the system region and maps the virtual address space between 512MB and 4GB. The shared arena describes all the shared memory objects in the process region.

Each process has its own private arena, which contains EXE code and the process's private data. The private arena starts at the lowest address of the process region's virtual address space and has a minimum size of 64MB. A program loaded into the address space will be loaded at the low end of the address space. Because of this, a particular EXE will always occupy the same range of addresses. If a program is used by more than one process, it is possible to share one copy of the program code.

Each process has its own address space, which maps memory objects in the process's private arena and in the shared arena. Only those objects in the shared arena that a process requires and is authorized to access are mapped into the process's address space.

Both private and shared storage for memory objects may be allocated within each arena. For example, DLL instance data is located within the shared arena. However, each instance of the data uses a separate memory object to preserve data integrity, so each of these memory objects is treated as private storage. Although they are separate memory objects, they all map to the same range of addresses in the shared arena. The table below shows the types of storage (private or shared) available within each memory arena, and the uses applications may make of each type.

+----------------------+-----------------------+--------------------------+
|Arena                 |Private Storage        |Shared Storage            |
+----------------------+-----------------------+--------------------------+
|Private               |EXE read/write data    |Shared run-time data      |
|                      |Process run-time data  |Shared DLL data           |
+----------------------+-----------------------+--------------------------+
|Shared                |DLL instance data      |DLL code and global data  |
+----------------------+-----------------------+--------------------------+

Memory Object Classes. This table shows the way in which memory objects may be placed in shared or private storage.

Note: Code includes read-only objects such as Presentation Manager resources.

Page Attributes

An application may specify the types of access permitted for memory objects when those objects are allocated, thereby ensuring the proper use of each memory object. The type of access for individual pages within the memory object may be altered subsequent to allocation. The attributes available for memory objects and their component pages are:

- Commit: The pages within a memory object must be committed before they can be used for read or write operations. Until a page is committed, the system merely reserves a linear address range without reserving physical storage. Committing a page makes storage available for it. As described under Virtual Pages, the physical page frame itself is not assigned until the page is first referenced.

- Read: Read access to the page is allowed. All other access attempts will result in a page fault.

- Write: Write access to the page is allowed. Write access implies both read and execute access.

- Execute: Execute access to the page is allowed. Execute access implies read access.

- Guard: When an application attempts to write into the guard page, a guard page fault exception is generated for the thread that referenced the guard page. This exception can be handled by an application-registered exception handler for this thread.

- Tile: Defining a memory object as tiled causes it to be placed in the compatibility region and mapped using the 16:16 addressing scheme, even though the object may be used by a 0:32 process.

Specifying this attribute has no effect under OS/2 2.0. All application-created storage must reside below the 512MB address limit and is therefore already in the compatibility region. The attribute is provided so that applications can be written to be forward-compatible with future versions of the operating system. A future release of OS/2 is likely to enable the 32-bit region (above 512MB) for application use. This attribute must then be specified for any memory object that will be used by 16-bit code.

The 80386 processor does not distinguish between read and execute access. The one implies the other. Write access implies both read and execute access.
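The gap between the attributes an application asks for and the protection the 80386 actually enforces can be expressed as a small mapping function. The 80386 page protection distinguishes only read-only from read/write, which is why read and execute imply each other and write implies both. The following C sketch shows this mapping. The attribute values and the `effective_access` function are hypothetical; they are not OS/2's own flag constants. Commit and guard handling are not modeled.

```c
#include <assert.h>

/* Hypothetical attribute bits (not the values of OS/2's real flags). */
enum { ATTR_READ = 1, ATTR_WRITE = 2, ATTR_EXECUTE = 4 };

/* Access the 80386 paging hardware actually grants for a page requested
   with `attrs`: write implies read and execute; read and execute imply
   each other. */
unsigned effective_access(unsigned attrs)
{
    assert((attrs & ~7u) == 0);
    if (attrs & ATTR_WRITE)
        return ATTR_READ | ATTR_WRITE | ATTR_EXECUTE;
    if (attrs & (ATTR_READ | ATTR_EXECUTE))
        return ATTR_READ | ATTR_EXECUTE;
    return 0; /* no access requested: any reference faults */
}
```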

Memory Protection

With the flat memory model, OS/2 2.0 implements memory protection in two ways. It uses two machine states (user and supervisor), and it provides separate address spaces for the supervisor and for each process running in the system. The global address space encompasses the entire linear address space and consists of the system region plus the current process's address space. The global address space is accessible only when the processor is running at ring 0, which is reserved for the operating system. All other processes run at ring 3 (privilege level 3). While executing at ring 3, the current process cannot see the system region, nor can it access the address spaces of any other process in the system.

Because the operating system manages memory page by page, even the allocation of a 1-byte memory object actually reserves a full page (4KB). The memory protection scheme has also changed under OS/2 2.0. A memory reference outside the expected range but within the 4KB page boundary no longer produces the Trap 000D segmentation violation seen in previous versions of OS/2. Instead, an exception is generated only when an invalid page is referenced or an invalid access occurs, such as a write to a page previously declared read-only. An invalid page is a page that has not been committed in the process address space or that lies outside the limit of the address space.

Here we see a major difference between the segmented memory model and the linear, or flat, memory model. A 32-bit program can address the entire 4GB address space with a 32-bit offset, so memory is seen as a single continuum. 16-bit applications, in contrast, see memory as discrete areas, each with its own defined size. 16-bit applications running under OS/2 2.0 are subject to segment limit checking and generally behave as they did under previous versions of OS/2. The discussion here refers to 32-bit applications.

For example, an application may request the allocation of 1KB of memory; the operating system will allocate a full 4KB page. The application can then write up to 4096 bytes of data into the memory object, and the operating system will not detect an error. However, if the application attempts to write 4097 bytes into the memory object, a general protection exception (Trap 000D) may occur. Such an exception is only generated when the next page in the process address space is invalid. If the next page exists in the process address space, no exception is generated.

Note that any of these exceptions may be trapped and processed using exception handlers registered by the application.

Because 32-bit programs can address the entire address space with a 32-bit offset, it is easier for them to corrupt data in the shared region than it is for 16-bit programs. OS/2 2.0 lets DLL routines have their shared data areas allocated in a protected area of memory that 32-bit programs cannot access, which provides a level of protection. A new PROTECT option on the MEMMAN statement in CONFIG.SYS enables this memory protection for DLLs.

Physical Memory Management

The previous discussion concentrated on the application's view of memory management. Applications running under OS/2 2.0 need not even be aware of the mechanism through which the 0:32 addressing scheme is implemented. An application deals only with 32-bit addresses and is not concerned with the way these are mapped to physical addresses.

When using the flat memory model, the 80386 processor treats each memory address as a 32-bit offset from address 0 into a linear address space. If the processor is running with paging disabled, this linear address is equal to the physical address in memory. With paging enabled, physical memory is divided into 4KB blocks (page frames), which are allocated as required to processes running in the system. There is no direct correlation between the linear address of a page and its address in physical storage. In fact, not all pages in the linear address space are represented in physical memory at all. The 80386 paging subsystem converts each linear address into a physical address. It also detects situations where no physical page corresponds to a page in the linear address space.

Address Translation

With paging enabled, the 80386 processor maps the linear address using an entry in the page directory and an entry in one of the page tables currently present in the system. The page directory and page tables are structures created in the linear address space. The address translation process is shown below. The linear address is split into three parts:

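- Page directory index, selecting a Page Directory Entry or PDE (10 bits, bits 31-22)

- Page table index, selecting a Page Table Entry or PTE (10 bits, bits 21-12)

- Page Offset or PO within the page (12 bits, bits 11-0)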
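Splitting a linear address into these three fields is just shifting and masking. The following C sketch shows the split. The `linear_fields` structure and `split_linear` function are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint32_t dir;    /* bits 31..22: index into the page directory (0..1023) */
    uint32_t table;  /* bits 21..12: index into the page table (0..1023) */
    uint32_t offset; /* bits 11..0:  byte offset within the 4KB page */
} linear_fields;

linear_fields split_linear(uint32_t lin)
{
    linear_fields f;
    f.dir    = lin >> 22;             /* top 10 bits */
    f.table  = (lin >> 12) & 0x3FFu;  /* middle 10 bits */
    f.offset = lin & 0xFFFu;          /* low 12 bits */
    assert(f.dir < 1024 && f.table < 1024 && f.offset < 4096);
    return f;
}
```

For example, linear address 0x12345678 selects PDE 0x48, PTE 0x345, and byte 0x678 within the page.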

OS/2 2.0 maintains a single page directory for the entire system. The entries within it are unique to each process in the system, and the operating system copies them into the page directory when a task switch occurs. The page directory entries contain pointers to the page tables, which in turn point to the memory pages (both shared and private) belonging to the current process. The page directory and each page table are 1 page (4KB) in size and are aligned on 4KB page boundaries. Each page table holds at most 1024 entries, and the page directory refers to at most 1024 page tables. Since each page is 4KB in size, a single page table gives access to 4MB of memory. The maximum of 1024 page tables gives access to the full 4GB global address space.
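The sizes quoted above follow from the 10/10/12-bit split of the linear address and from each entry being 32 bits (a 20-bit address plus 12 control and status bits):

$$
\begin{aligned}
1024\ \text{entries} \times 4\ \text{bytes} &= 4\,\text{KB} = \text{one page per directory or table} \\
2^{10}\ \text{PTEs} \times 2^{12}\ \text{bytes per page} &= 2^{22}\ \text{bytes} = 4\,\text{MB per page table} \\
2^{10}\ \text{page tables} \times 2^{22}\ \text{bytes} &= 2^{32}\ \text{bytes} = 4\,\text{GB}
\end{aligned}
$$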

Page directory entries and page table entries have an identical format. The upper 20 bits of a page directory entry specify the address of a page table. Every page table is aligned on a 4KB boundary, so the lower 12 bits of that address are always zero and need not be stored. The lower 12 bits of the entry are therefore used to hold control and status information. In the same way, each page table entry holds the address of a physical page frame in its upper 20 bits.

The address resolution may appear complex, but in fact very little overhead is involved since the 80386 maintains a cache buffer for page table entries, known as the translation lookaside buffer (TLB). The TLB satisfies most access requests for page tables, avoiding the necessity to access system memory for PDEs and PTEs.

When page table entries are changed, or during a task switch, the TLB must be flushed to remove invalid entries. Otherwise, stale data might be used for address translation.

For each page frame, a bit in the page table entry known as the present bit indicates whether the address in that page table entry maps to a page in physical memory. When the present bit is set, the page is in memory. When the present bit is clear in either the page directory or in the page table, a page fault is generated if an attempt is made to use a page table entry for address translation.

Managing Paging

Pages can have the following types:

- Fixed: These pages are permanently resident in storage. They may not be moved or swapped out to secondary storage.

- Swappable: When there is a shortage of physical memory, these pages can be swapped to disk.

- Discardable: These pages can be reloaded from an EXE or DLL file. When memory becomes overcommitted, the space used by discardable pages can be freed, and when the pages are required again they are reloaded from the original file.

- Invalid: These pages have been allocated but not committed.

The operating system needs information over and above that contained in the page directories and the page tables to manage the paging process. OS/2 2.0 builds three arrays of data structures that represent:

- Committed pages in the process and system address spaces

- Pages in physical memory

- Pages held on secondary storage

The following sections describe these arrays and the way in which OS/2 2.0 uses them.

Virtual Pages

A virtual page structure (VP) is allocated whenever a page is committed in response to an application request. No physical memory is allocated for the page at this time: the PTE is updated to point to the VP, but its present bit is not set. When the page is first referenced, a page fault occurs. In this way, the allocation of physical memory is deferred until the last possible moment.

The virtual page structure describes the current disposition of a page. When a page fault occurs, the virtual memory manager obtains the address of the VP from the page table entry. It then uses the information held in the VP to determine the action required to resolve the page fault. The possible actions are:

- The page manager will provide a page, initialized to zeros if required.

- The page will be loaded from an EXE or DLL file.

- The page will be loaded from the swap file on secondary storage.

If the page is to be loaded from an EXE or DLL file, the VP contains a pointer to the loader block. If it is to be loaded from the swap file, the VP points to a page in the swap file.
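One way to picture the VP is as a tagged record whose interpretation depends on where the page's contents come from. The sketch below is hypothetical. OS/2 2.0's actual VP layout is not described here, and the field and enumerator names are invented for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Where a committed-but-not-present page's contents come from. */
typedef enum {
    VP_ZERO_FILL,   /* memory manager supplies a page (zeroed if required) */
    VP_FROM_LOADER, /* reload from an EXE or DLL file                      */
    VP_FROM_SWAP    /* reload from the swap file                           */
} vp_source;

typedef struct {
    vp_source source;
    union {
        uint32_t loader_block; /* VP_FROM_LOADER: handle of the loader block */
        uint32_t swap_slot;    /* VP_FROM_SWAP: page index in the swap file  */
    } where;
} virtual_page;

typedef enum { ACT_ZERO_PAGE, ACT_READ_EXECUTABLE, ACT_READ_SWAP_FILE } fault_action;

/* Decide how to resolve a fault. *arg receives the loader block or swap
   slot when one is needed, otherwise 0. */
fault_action resolve(const virtual_page *vp, uint32_t *arg)
{
    switch (vp->source) {
    case VP_FROM_LOADER:
        *arg = vp->where.loader_block;
        return ACT_READ_EXECUTABLE;
    case VP_FROM_SWAP:
        *arg = vp->where.swap_slot;
        return ACT_READ_SWAP_FILE;
    case VP_ZERO_FILL:
    default:
        *arg = 0;
        return ACT_ZERO_PAGE;
    }
}
```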

Page Frames

A page frame (PF) exists for each page of physical memory in the system. Page frames are stored in an array, which is indexed by the physical frame number within the system.

A page frame may have one of three states:

- Free, in which case the page frame is available for allocation to a process in the system. The page frame addresses of all the free pages in the system are held in a doubly linked list known as the free list, with PFs for fast planar memory at one end of the list, and PFs for the slower memory on adapters at the other end. This permits the allocation of faster memory before the slower memory.

- In-use, in which case the page frame has been allocated to the current process in the system.

- Idle, in which case the page frame has been allocated to a process, but no page table entries for the current process reference this frame. This normally indicates that the process using the page has been switched out; that is, it is not the current process.
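The free-list ordering can be sketched with an ordinary doubly linked list. Planar frames are inserted at the head and adapter frames at the tail, and allocation always takes the head. The structure and function names are hypothetical, and real page frame records hold much more state.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct page_frame {
    unsigned number;                /* physical frame number        */
    bool on_adapter;                /* slower memory on an adapter? */
    struct page_frame *prev, *next;
} page_frame;

typedef struct { page_frame *head, *tail; } frame_list;

/* Return a frame to the free list: fast planar frames at the head,
   slow adapter frames at the tail. */
void free_frame(frame_list *l, page_frame *f)
{
    if (!f->on_adapter) {
        f->prev = NULL;
        f->next = l->head;
        if (l->head) l->head->prev = f; else l->tail = f;
        l->head = f;
    } else {
        f->next = NULL;
        f->prev = l->tail;
        if (l->tail) l->tail->next = f; else l->head = f;
        l->tail = f;
    }
}

/* Allocate from the head, so faster memory is handed out first. */
page_frame *alloc_frame(frame_list *l)
{
    page_frame *f = l->head;
    if (!f) return NULL;
    l->head = f->next;
    if (l->head) l->head->prev = NULL; else l->tail = NULL;
    f->next = f->prev = NULL;
    return f;
}
```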

When the system is overcommitted, the number of free and idle page frames begins to fall. When it reaches a threshold level, a page ager migrates pages from the in-use state to the idle state. The page ager looks for pages that have not been accessed since the last time it ran by examining the accessed bit in each PTE; if the bit is clear, it marks the page not present. If a page is referenced by more than one PTE (a shared page), all of those PTEs must be marked not present before the page is placed on the idle list. The idle list is also a doubly linked list, with least recently used (LRU) entries at one end and most recently used (MRU) entries at the other.
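A single pass of the ager might look like the following sketch. The text does not say so explicitly, so the sketch assumes that the ager clears the accessed bit of any page it finds referenced. That lets the next pass detect activity in the interval. The idle list is modeled as an array whose first slot is the LRU end, and all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PG_PRESENT  0x001u
#define PG_ACCESSED 0x020u
#define MAX_SHARERS 4
#define MAX_FRAMES  64

/* An in-use frame and the PTEs that map it (more than one if shared). */
typedef struct {
    unsigned  number;
    uint32_t *ptes[MAX_SHARERS];
    size_t    nptes;
    int       idle;                 /* already on the idle list? */
} frame;

/* Idle list as an array: index 0 is the LRU end, the last slot the MRU end. */
typedef struct { frame *f[MAX_FRAMES]; size_t n; } idle_list;

void age_pages(frame *frames, size_t count, idle_list *idle)
{
    for (size_t i = 0; i < count; i++) {
        frame *fr = &frames[i];
        if (fr->idle) continue;
        size_t not_present = 0;
        for (size_t j = 0; j < fr->nptes; j++) {
            uint32_t *pte = fr->ptes[j];
            if (*pte & PG_ACCESSED)
                *pte &= ~PG_ACCESSED;   /* referenced: reset, check next pass */
            else
                *pte &= ~PG_PRESENT;    /* not referenced: mark not present  */
            if (!(*pte & PG_PRESENT))
                not_present++;
        }
        /* A shared page goes idle only when every mapping is not present. */
        if (not_present == fr->nptes && idle->n < MAX_FRAMES) {
            fr->idle = 1;
            idle->f[idle->n++] = fr;    /* append at the MRU end */
        }
    }
}
```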

Pages are also classified as dirty or clean. A dirty page is one that has been written to, and its contents must be swapped to disk before its frame can be allocated to another process. A clean page does not need to be swapped out. It typically contains code or read-only data that can be reloaded from the original file on disk, or else an unchanged copy of it already exists in the swap file.

Placing a page frame on the idle list does not destroy its contents. The page frame is only reused when the operating system is forced to steal a page frame from the idle list in order to accommodate the loading of a page after a page fault. The swapping of an idle swappable page to disk is also usually delayed until there is a need to reuse the page frame to satisfy a page fault. To take advantage of the capability of certain I/O adapters to chain operations, other pages on the idle list may be swapped out at the same time.
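Stealing from the idle list can be sketched as taking the frame at the LRU end and writing its old contents out only if it is dirty. The second function uses the same toy model to show how other dirty idle pages might be collected for one chained write. The array-based idle queue and all names are illustrative assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define IDLE_MAX 16

/* An idle frame keeps its contents until it is actually stolen. */
typedef struct { unsigned number; bool dirty; } idle_frame;

/* Array model: slot 0 is the LRU end. */
typedef struct { idle_frame f[IDLE_MAX]; size_t n; } idle_queue;

/* Steal the LRU frame to satisfy a page fault. Returns false if the list
   is empty. *write_back tells the caller whether the old contents must
   first be written to the swap file; clean frames are simply reused. */
bool steal_lru(idle_queue *q, idle_frame *out, bool *write_back)
{
    if (q->n == 0) return false;
    *out = q->f[0];
    *write_back = out->dirty;
    for (size_t i = 1; i < q->n; i++)   /* close the gap */
        q->f[i - 1] = q->f[i];
    q->n--;
    return true;
}

/* Collect up to `max` further dirty idle frames that could be written
   in the same chained I/O. Returns how many were stored in `batch`. */
size_t gather_dirty(const idle_queue *q, unsigned *batch, size_t max)
{
    size_t k = 0;
    for (size_t i = 0; i < q->n && k < max; i++)
        if (q->f[i].dirty)
            batch[k++] = q->f[i].number;
    return k;
}
```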

Swap Frames

A swap frame (SF) is similar to a page frame, except that an SF refers to a slot in the swap file, which can be used to hold a page when main storage becomes overcommitted. The swap frame array is used to control the allocation of space in the swap file. If the SWAP option is present on the MEMMAN statement in CONFIG.SYS, the SWAPPER.DAT file is created in the directory specified by the SWAPPATH statement. The initial size of the file is 512KB.

The size of the swap file is determined by the amount of memory overcommitment in the system. The calculation takes into account the storage needed for all the fixed and swappable pages in the system, and the amount by which this exceeds the physical storage installed. The memory overcommitment is recalculated each time pages are committed. Because the swap file always grows in steps of 512KB, it should not be necessary to increase its size every time pages are committed.
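The sizing rule can be approximated as follows. This is a hypothetical formula built from the description above: the overcommitment is rounded up to whole 512KB steps and never drops below the initial 512KB. The exact algorithm OS/2 2.0 uses is not given here.

```c
#include <assert.h>
#include <stdint.h>

#define SWAP_STEP (512u * 1024u)   /* growth/shrink granularity: 512KB */

/* Hypothetical sizing rule: cover the amount by which fixed + swappable
   storage exceeds installed memory, rounded up to whole 512KB steps,
   with the initial 512KB as a floor. */
uint64_t swap_file_size(uint64_t fixed_bytes, uint64_t swappable_bytes,
                        uint64_t physical_bytes)
{
    uint64_t needed = fixed_bytes + swappable_bytes;
    uint64_t over = needed > physical_bytes ? needed - physical_bytes : 0;
    uint64_t steps = (over + SWAP_STEP - 1) / SWAP_STEP;  /* round up */
    if (steps == 0)
        steps = 1;                                         /* initial size */
    return steps * SWAP_STEP;
}
```

Rounding up to whole steps is what lets many small commits be absorbed without resizing the file each time.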

However, VPs are not allocated to SFs when pages are committed; at that time, the operating system only ensures that there will be space in the swap file for the page when it becomes necessary to swap it out. The SF is allocated to the page when the page is first selected from the idle list for swapping. When the page is swapped back in, the SF is not freed immediately; instead, its link to the VP is maintained. If the page is then selected for swapping out again before it has been changed, it does not need to be written to disk, because a copy of it already exists in the swap file.
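The deferred allocation of SFs and the retained SF link can be modeled with two small functions. The sketch assumes a trivial counter-based swap frame allocator (the hypothetical `next_free_slot`). It tracks only the fields needed to decide whether a write is required, and all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define NO_SLOT (-1)

/* Swap-related state of one VP. */
typedef struct {
    int  swap_slot;   /* SF linked to this VP, or NO_SLOT                */
    bool modified;    /* written since the copy in the swap file was made */
} vp_swap_state;

/* Called when the page is selected from the idle list for swapping.
   Returns true if the page must be written to vp->swap_slot. */
bool swap_out(vp_swap_state *vp, int *next_free_slot)
{
    if (vp->swap_slot != NO_SLOT && !vp->modified)
        return false;                          /* identical copy on disk */
    if (vp->swap_slot == NO_SLOT)
        vp->swap_slot = (*next_free_slot)++;   /* SF allocated only now  */
    vp->modified = false;
    return true;
}

/* Called after the page is read back in: the SF link is kept. */
void swap_in(vp_swap_state *vp)
{
    vp->modified = false;                      /* memory matches the SF */
}
```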

The OS/2 2.0 swap file can also decrease in size, again in 512KB blocks. When the overcommitment calculation indicates that the swap file is too large by one or more multiples of 512KB, the area at the end of the swap file is marked for shrinking, and no new SFs are allocated in that area. When all SFs in the area marked for shrinking are free, the swap file is reduced in size. No attempt is made to force the freeing of SFs in this area. Consequently, there can be a delay between the swap file becoming eligible for shrinking and the shrinking actually taking place.
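The lazy shrinking rule can be expressed as a scan from the end of the swap file. The scan counts whole 512KB steps (128 swap frames of 4KB each) that are entirely free, up to the surplus computed by the overcommitment calculation. This is an illustrative sketch with a hypothetical name, assuming one slot per 4KB page.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SLOTS_PER_STEP 128u   /* 512KB / 4KB per page */

/* in_use[i] tells whether swap frame i holds a page. Returns how many of
   the `surplus_steps` trailing 512KB steps can be cut right now. A step is
   released only when every SF in it is free; nothing is forced out. */
size_t shrinkable_steps(const bool *in_use, size_t nslots, size_t surplus_steps)
{
    size_t steps = nslots / SLOTS_PER_STEP;
    size_t cut = 0;
    while (cut < surplus_steps && cut < steps) {
        size_t first = (steps - cut - 1) * SLOTS_PER_STEP;
        for (size_t i = first; i < first + SLOTS_PER_STEP; i++)
            if (in_use[i])
                return cut;    /* a busy SF blocks this step and all below */
        cut++;
    }
    return cut;
}
```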

Processing Page Faults

When a process attempts to access a page whose PTE does not have the present bit set, a page fault occurs. The page fault is passed to OS/2 2.0's page fault handler, and the following sequence of events takes place:

- A PF is allocated from the free list or from the LRU end of the idle list. If the PF is taken from the idle list and its current contents are marked as "dirty", the page must first be written to the swap file.

- Once the PF is available, its contents are loaded based on the information contained in the VP. The source can be one of the following:

  - If the page is marked "allocate on demand", the physical memory manager provides the page. If requested, the page is initialized to zeros.

  - If the page is discardable, it is reloaded from an executable file on disk.

  - If the page is swappable but is currently on the idle list, it can be reclaimed, because it is still present in memory.

  - If the page is swapped out, it is reloaded from the swap file.

- The PTE is updated with the PF address and the present bit is set.

- The TLB is flushed.

- The program instruction that caused the page fault is restarted.
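The sequence above can be walked through with a toy machine model. Everything in the sketch is hypothetical: the structure, the single-frame idle list, and the counters that stand in for disk I/O and TLB flushes. For simplicity, a page that is still on the idle list is reclaimed in place without allocating a new frame.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define PAGE       4096u
#define PG_PRESENT 0x001u
#define NFRAMES    4

/* Where the VP says the page's contents come from. */
typedef enum { SRC_ZERO, SRC_EXE, SRC_SWAP, SRC_IDLE } page_source;

typedef struct {
    uint8_t  mem[NFRAMES][PAGE];   /* simulated physical memory            */
    int      free_frames[NFRAMES]; /* free list, used as a stack           */
    int      nfree;
    int      idle_lru;             /* frame at the LRU end of the idle list */
    bool     idle_dirty;           /* must its contents be written out?     */
    unsigned swap_writes, disk_reads, tlb_flushes;
} machine;

/* Resolve a fault on the page mapped by *pte. `idle_frame` is the frame
   that still holds the page when src == SRC_IDLE. */
void page_fault(machine *m, uint32_t *pte, page_source src, int idle_frame)
{
    int f;
    if (src == SRC_IDLE) {
        f = idle_frame;                    /* reclaim: contents still valid */
    } else {
        /* 1. Take a free frame, else steal the LRU idle frame. */
        if (m->nfree > 0) {
            f = m->free_frames[--m->nfree];
        } else {
            assert(m->idle_lru >= 0);      /* toy model: one idle frame */
            f = m->idle_lru;
            if (m->idle_dirty)
                m->swap_writes++;          /* write old contents to swap */
            m->idle_lru = -1;
        }
        /* 2. Fill the frame as the VP directs. */
        if (src == SRC_ZERO)
            memset(m->mem[f], 0, PAGE);    /* allocate on demand, zeroed */
        else
            m->disk_reads++;               /* EXE/DLL file or swap file  */
    }
    /* 3. Point the PTE at the frame and set the present bit. */
    *pte = ((uint32_t)f << 12) | PG_PRESENT;
    /* 4. Flush the TLB. 5. Returning from the fault restarts the instruction. */
    m->tlb_flushes++;
}
```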

Thunking

OS/2 I/O Management

This topic deals with how OS/2 provides I/O services to user programs.

Introduction to OS/2 I/O Management

At the heart of any operating system are the services it provides to user programs, mostly I/O services such as reading from and writing to various I/O devices. OS/2 offers a wide variety of I/O services to user programs.

In most other operating systems, such as UNIX, virtual I/O and other operating system services are obtained by executing a trap instruction. This instruction causes the CPU to switch into kernel mode and start running the operating system. The operating system first saves the registers and other state information, and then determines which service has been requested. Finally, it carries out the call and returns from the trap back to user mode.

```
 ________________________________
|   instruction                  |
|   instruction                  |
|   instruction                  |
|   instruction                  |   User Program
|   instruction                  |
|  +-- TRAP                      |
|  |    instruction  <-------+   |
|==|=========================|===|
|  |                         |   |
|  |      _____              |   |
|  |     |__|__|             |   |
|  |     |__|__|             |   |
|  +---> |__|__|--- System --+   |   System Region
|        |__|__|    Call         |   (Operating System)
|        |__|__|                 |
|        |__|__|                 |
|     Interrupt Vector Table     |
|________________________________|
```

Fig ?.? Traditional system call made by trapping to the kernel

This method is used because the operating system is not part of the user program's address space. The user's virtual address space, from 0 to some maximum, is filled entirely with the user program and its data; no part of the operating system is located in it.

In OS/2, in contrast, the entire operating system is part of the user's address space. It occupies the addresses from 512MB up to 4GB in every process address space. User programs may directly invoke system calls, or API function requests, located in the operating system's address space, without trapping to the kernel or going through any interrupt vectors.

```
 ________________________________ 4GB
|                                |
|                                |   System Region
|  +------ System Call ------+   |   (Operating System)
|__|_________________________|___| 512MB
|  |                         |   |
|  |   instruction           |   |
|  |   instruction           |   |
|  |   instruction           |   |   User Program
|  |   instruction           |   |
|  |   instruction           |   |
|  +-- CALL                  |   |
|      instruction  <--------+   |
|      instruction               |
|      instruction               |
|________________________________| 0
```

Fig ?.? OS/2 2.0 System Call convention

To prevent malicious user programs from calling operating system procedures that they have no business calling, all calls to operating system procedures go through call gates.


Moreover, most system calls have parameters, such as the file descriptor to be used and the address of the data buffer. With the TRAP mechanism, these parameters have to be put either in registers, which is not always convenient, or on the user stack, where the kernel cannot get to them easily. Some machines have special instructions that allow the kernel to read and write user space, but these can be used only from assembly-language procedures. All in all, the overhead required to make a system call via the TRAP mechanism is typically several thousand instructions.

In OS/2, the operating system is called as an ordinary procedure, so it has direct access to the parameters within its own address space. Parameter access does not cause any extra overhead, and both the user program and the operating system can be written in a high level language, such as C, without any assembly code to handle the trap or parameter transfer.
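The sketch below decodes a descriptor in the standard 80386 call gate format. A far CALL through a gate transfers control to the selector:offset stored in the descriptor. The 5-bit count field tells the processor how many doublewords of parameters to copy from the caller's stack to the more privileged stack. The function names are hypothetical. The privilege test covers only the gate's own DPL check, not the additional check the processor makes on the target code segment.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Fields of an 80386 call gate descriptor (8 bytes). */
typedef struct {
    uint32_t offset;       /* entry point: bytes 0-1 and 6-7                 */
    uint16_t selector;     /* target code segment: bytes 2-3                 */
    uint8_t  param_dwords; /* byte 4, bits 0-4: dwords copied between stacks */
    uint8_t  type;         /* byte 5, bits 0-3: 0xC = 80386 call gate        */
    uint8_t  dpl;          /* byte 5, bits 5-6: gate privilege level         */
    bool     present;      /* byte 5, bit 7                                  */
} call_gate;

/* `low` holds bytes 0-3 and `high` bytes 4-7 (little-endian). */
call_gate decode_call_gate(uint32_t low, uint32_t high)
{
    call_gate g;
    g.offset       = (low & 0xFFFFu) | (high & 0xFFFF0000u);
    g.selector     = (uint16_t)(low >> 16);
    g.param_dwords = (uint8_t)(high & 0x1Fu);
    g.type         = (uint8_t)((high >> 8) & 0xFu);
    g.dpl          = (uint8_t)((high >> 13) & 0x3u);
    g.present      = ((high >> 15) & 1u) != 0;
    return g;
}

/* The gate's own check: both the caller's CPL and the selector's RPL
   must be numerically <= the gate's DPL. */
bool gate_usable(const call_gate *g, unsigned cpl, unsigned rpl)
{
    return g->present && cpl <= g->dpl && rpl <= g->dpl;
}
```

A ring 3 application can use a gate only if its DPL is 3. This is how the operating system chooses which procedures user code may call.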

OS/2 APIs

The set of system calls provided by OS/2 is called the API (Application Program Interface). These calls define the set of virtual I/O and other instructions provided by OS/2. When Presentation Manager, OS/2's windowing system, is used, another 500 or so API calls are provided for creating, destroying, and otherwise managing windows, menus, and icons. Not all of these procedures run at ring 0; some run at ring 2 or 3.

The basic API calls are divided into four categories, identified by the prefix of each function name:

```
+-------+------------------------+
|Name   |Description             |
+-------+------------------------+
|DOS    |Main system services    |
+-------+------------------------+
|VIO    |Video subsystem         |
+-------+------------------------+
|KBD    |Keyboard subsystem      |
+-------+------------------------+
|MOU    |Mouse subsystem         |
+-------+------------------------+
```

DOS FAT File System

OS/2 File Systems

An application views a file as a logical sequence of data; OS/2 file systems manage the physical locations of that data on the storage device for the application. The file systems also manage file I/O operations and control the format of the stored information.

Applications use the OS/2 file system functions to open, read, write, and close disk files. File system functions also enable an application to use and maintain the disks that contain the files: the volumes, the directories, and the files on the disks of the computer. Applications also use OS/2 file system functions to perform I/O operations to pipes and to peripheral devices connected to the computer, such as the printer.

OS/2 FAT File System

The FAT file system driver under OS/2 2.0 has been modified in order to provide improved performance and enhanced support for disk hardware devices:

- The FAT driver now supports command chaining. The driver attempts to call the volume manager with a list of all the contiguous sector requests required to fulfill an I/O request, thus allowing multiple page-in and page-out requests in a single logical operation.

- The FAT driver provides faster allocation of free space on the logical drive, using a bitmap to track free clusters on the disk.
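The two driver improvements can be sketched in C. The first function coalesces a sorted list of sector numbers into contiguous runs, the kind of list a chained request could carry. The second allocates a cluster from an in-memory free-space bitmap. Both functions are hypothetical illustrations, not the FAT driver's code, and the bitmap convention (bit set means cluster in use) is an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { uint32_t start, count; } sector_run;

/* Merge sorted sector numbers into runs of contiguous sectors, so that one
   chained request can cover each run. Returns the number of runs. */
size_t coalesce(const uint32_t *sectors, size_t n, sector_run *runs)
{
    size_t r = 0;
    for (size_t i = 0; i < n; i++) {
        if (r > 0 && sectors[i] == runs[r - 1].start + runs[r - 1].count) {
            runs[r - 1].count++;           /* extends the current run */
        } else {
            runs[r].start = sectors[i];    /* starts a new run */
            runs[r].count = 1;
            r++;
        }
    }
    return r;
}

/* Find the first free cluster, mark it used, and return it (-1 if full). */
long alloc_cluster(uint8_t *bitmap, size_t nclusters)
{
    for (size_t i = 0; i < nclusters; i++) {
        if (bitmap[i / 8] == 0xFF) {       /* whole byte in use: skip it */
            i += 7 - (i % 8);
            continue;
        }
        if (!(bitmap[i / 8] & (1u << (i % 8)))) {
            bitmap[i / 8] |= (uint8_t)(1u << (i % 8));
            return (long)i;
        }
    }
    return -1;
}
```

Skipping full bytes is what makes a bitmap scan cheaper than walking the FAT chain by chain.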

Disk caching is now supported within the FAT driver, and has been removed from the device driver. A cache buffer is provided to support disk caching with the following features:

- Lazy writing

- Lazy reading on writes, that is, the ability to write to the cache and flush the cache to disk, but then to read the updated information from the cache rather than requiring a physical disk read operation

- Asynchronous read-ahead through a multi-purpose asynchronous read thread

- Large cache size (theoretical maximum of 64MB, although practical limitations will necessitate a smaller cache)

- The ability to dynamically enable and disable the cache in response to a user command

- Automatic bypassing of bad sectors on reads
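A toy write-back cache illustrates two of these features: lazy writing, and reading updated data back from the cache after a flush. It is a direct-mapped sketch over a simulated disk with hypothetical names. It omits read-ahead, locking, and the asynchronous thread.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SECTOR      512
#define CACHE_LINES 8

typedef struct {
    bool     valid, dirty;
    uint32_t sector;
    uint8_t  data[SECTOR];
} cache_line;

typedef struct {
    cache_line line[CACHE_LINES];
    uint8_t  (*disk)[SECTOR];      /* simulated disk */
    unsigned disk_reads, disk_writes;
} disk_cache;

/* Pick the line for `sector`, writing back a dirty line it displaces. */
static cache_line *slot_for(disk_cache *c, uint32_t sector)
{
    cache_line *l = &c->line[sector % CACHE_LINES];
    if (l->valid && l->dirty && l->sector != sector) {
        memcpy(c->disk[l->sector], l->data, SECTOR);
        c->disk_writes++;
        l->dirty = false;
    }
    return l;
}

void cache_read(disk_cache *c, uint32_t sector, uint8_t *out)
{
    cache_line *l = slot_for(c, sector);
    if (!l->valid || l->sector != sector) {   /* miss: read the disk */
        memcpy(l->data, c->disk[sector], SECTOR);
        c->disk_reads++;
        l->valid = true;
        l->sector = sector;
        l->dirty = false;
    }
    memcpy(out, l->data, SECTOR);             /* hit: no disk access */
}

/* Lazy write: only the cache is updated now. */
void cache_write(disk_cache *c, uint32_t sector, const uint8_t *in)
{
    cache_line *l = slot_for(c, sector);
    memcpy(l->data, in, SECTOR);
    l->valid = true;
    l->sector = sector;
    l->dirty = true;
}

/* Write dirty lines to disk; the data stays cached for later reads. */
void cache_flush(disk_cache *c)
{
    for (int i = 0; i < CACHE_LINES; i++) {
        cache_line *l = &c->line[i];
        if (l->valid && l->dirty) {
            memcpy(c->disk[l->sector], l->data, SECTOR);
            c->disk_writes++;
            l->dirty = false;
        }
    }
}
```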

There are a number of advantages in performing caching in the FAT driver rather than the device driver; more operating system kernel services are available at this level, and intelligent read-ahead operations can more easily be performed. Lazy writing is also more easily implemented at the file system level than at the device driver level.

The FAT file system under OS/2 2.0 supports a maximum file size of 2GB. The maximum supported size for a FAT volume is also 2GB.

HPFS File System

Installable File Systems

Extended Attributes

OS/2 Device Drivers

OS/2 Presentation Manager

This topic deals with OS/2's graphical windowing system.

Introduction to Presentation Manager

Reactive Event-Driven Paradigm

PM programs are mostly reactive. They spend most of their time in an "idle" state, waiting for events to happen. When an event such as a keypress or a mouse click occurs, PM routes the input to the appropriate procedure in your code in the form of messages. The procedure can then choose either to react to the event or to pass it back to PM for default handling. If the procedure needs to display information in a window, it calls PM to do so. After making the appropriate response, the procedure returns to its idle state, waiting for the next event. So the first thing that can be said about a PM program is that it is made up of a collection of lazy, event-driven procedures that wait for work to find them.

```
A.) A traditional I/O polling loop

  +--> Key Pressed? ---Yes---> Process Key ----+
  |         | No                               |
  |         v                                  |
  |    Mouse Moved? ---Yes---> Process Mouse --+
  |         | No                               |
  |         v                                  |
  |   Timer Popped? ---Yes---> Process Timer --+
  |         | No                               |
  |         v                                  |
  +---------+----------------------------------+

B.) The event-driven way

  +--> Wait for an event to happen.
  |    (Message arrives)
  |           |
  |           v
  |     Process Event
  |           |
  +-----------+
```

fig 3.? I/O Polling Loops Versus PM's Event-Driven Processing

Window Objects

How does PM associate procedures with events? It does so through the magic of windows. Windows in PM are more than just the familiar overlapping rectangles that appear on a screen. A window is an "object". It becomes "an object that knows what to do" when it teams up with an event handler procedure. A PM program is conceptually a number of windows and the code that makes them know what to do. Some windows look like the traditional rectangular areas typically called windows. On the other hand, structures that are not "window-like", such as scroll bars, buttons, and menus, are also considered to be windows in PM. A window may or may not be visible on the screen. Most PM applications create at least one "traditional" window called the main window, which is typically visible and represents the application to the outside world. Here is a summary of PM window terminology.

- A frame window provides a border and serves as a base for constructing composite windows, such as the main window or a dialog window. Frames provide the visual unity that gives end users a consistent "look and feel".

- A main window or "standard window" is a frame window which contains windows such as a title bar, an action bar, and a scroll bar. Every main window includes a client window area. This is the area where an application does all its work.

- A dialog window is a frame window that contains one or more control windows that are used primarily to prompt the end user for input.

- A message window is a frame window that an application uses to display a message.

- A client window is the area in the window where the application displays its information. Every main window typically has a client window. The frame window receives events from the control windows that surround the client window and passes them to the client window. The application program provides the event-handler procedures that service these events.

- A control window is used in conjunction with another window to provide control structures. PM provides several pre-defined control windows such as: push buttons, entry fields, list boxes, menus, scroll bars, combo boxes, and multiple line editors (MLEs).

An application may consist of several main windows, dialog windows, and all the child windows associated with them.

Window Lineage

Every window has a parent window. A window is positioned relative to the coordinates of its parent. When a parent is hidden, so are its children. When the parent moves, its children move with it. Children are always drawn on top of the parent, and they get "clipped" if they go past the parent's borders. Windows that have the same parent are called siblings. In PM, a parent can have several children, each of which can have its own children, and so on.
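The lineage rules for position and visibility can be sketched by walking up the parent chain. The `win` structure is hypothetical and ignores clipping and z-order. It only shows why moving or hiding a parent affects its children.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical window record: position is relative to the parent. */
typedef struct win {
    struct win *parent;   /* NULL for the desktop            */
    int  x, y;            /* origin in parent coordinates    */
    bool shown;           /* the window's own visibility flag */
} win;

/* Screen position = sum of offsets along the chain of ancestors,
   so moving a parent moves all of its children with it. */
void screen_origin(const win *w, int *sx, int *sy)
{
    *sx = 0;
    *sy = 0;
    for (; w != NULL; w = w->parent) {
        *sx += w->x;
        *sy += w->y;
    }
}

/* A window is actually visible only if it and every ancestor are shown. */
bool is_visible(const win *w)
{
    for (; w != NULL; w = w->parent)
        if (!w->shown)
            return false;
    return true;
}
```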

Window Classes and Procedures

The "smart" element that defines a window's behavior is the window class. This is the element that knows "what to do". Every window belongs to a window class, which determines the style of the window and specifies the event-driven procedure that will handle the messages for the window. In PM terminology, these event-driven procedures are called window procedures. The window procedures are at the heart of programming in PM. They are responsible for all aspects of a window, including:

- How the window appears.

- How the window behaves and responds to state changes.

- How the window handles user input.

All user interaction with the window is passed as messages to its window procedure. When an application creates a window, it must specify a window class. The class tells PM which window procedure gets to process the messages generated by activity in that window. Multiple windows can belong to the same class, which means that a single window procedure can handle events from more than one window.
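The relationship between classes, windows, and window procedures can be modeled in a few lines of portable C. The sketch is hypothetical. The stand-ins `register_class`, `create_window` and `dispatch` take the roles of WinRegisterClass, window creation and WinDispatchMsg. The `*_SIM` constants stand in for real PM messages, whose actual signatures differ.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

enum { WM_PAINT_SIM = 1, WM_CHAR_SIM, WM_OTHER_SIM };

typedef struct window window;
typedef long (*window_proc)(window *w, int msg);

typedef struct { const char *name; window_proc proc; } window_class;
struct window { const window_class *cls; int paints, chars; };

#define MAX_CLASSES 8
static window_class classes[MAX_CLASSES];
static size_t nclasses;

/* Associate a class name with its window procedure. */
int register_class(const char *name, window_proc proc)
{
    if (nclasses == MAX_CLASSES) return 0;
    classes[nclasses].name = name;
    classes[nclasses].proc = proc;
    nclasses++;
    return 1;
}

/* Creating a window requires naming a registered class. */
int create_window(window *w, const char *class_name)
{
    for (size_t i = 0; i < nclasses; i++)
        if (strcmp(classes[i].name, class_name) == 0) {
            w->cls = &classes[i];
            w->paints = w->chars = 0;
            return 1;
        }
    return 0;
}

/* Stand-in for the PM-supplied default procedure. */
long default_proc(window *w, int msg) { (void)w; (void)msg; return 0; }

/* A window procedure: one case per message it cares about. */
long client_proc(window *w, int msg)
{
    switch (msg) {
    case WM_PAINT_SIM: w->paints++; return 1;
    case WM_CHAR_SIM:  w->chars++;  return 1;
    default:           return default_proc(w, msg);
    }
}

/* Every message for a window goes to its class's procedure. */
long dispatch(window *w, int msg) { return w->cls->proc(w, msg); }
```

Two windows created from the same class share `client_proc` but keep separate per-window state.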

PM's Message-Based Nervous System

If windows are the heart of PM and window procedures are its brain, then messages are its nervous system. Typically, dozens of messages will be circulating around the PM system. A message can contain information, a request for information, or a request for an action.

Even though OS/2 is a true multitasking operating system, PM applications must share the computer's keyboard, mouse, and screen. OS/2 places input events into PM's single system queue, which has room for roughly 60 keystrokes or mouse clicks. Messages are read off the system queue by the PM input router on a first-in, first-out basis. The input router converts the system input information into a message for the application that controls the window where the event was generated. The router can communicate the message to the application in one of two ways:

- By posting the message to the application's message queue. This is done for messages that do not require immediate action. Every PM application needs at least one message queue to receive input from the keyboard or mouse. An application can create one message queue per thread. A window is associated with the message queue of the thread that creates it. A single queue can, of course, handle more than one window. An application thread can retrieve and process the message from its queue at the appropriate time.

- By sending the message directly to the application's window procedure. This is done when PM requires immediate action from the application. The window procedure can carry out the request or pass the unprocessed message to a default window procedure provided by PM.

The window procedure handles both queued and non-queued messages in the same manner. It doesn't care how the message got to it.
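The difference between posting and sending can be illustrated with a toy queue. In PM itself these operations are performed with WinPostMsg and WinSendMsg. The functions below are hypothetical stand-ins with simplified signatures. The queue size of 10 echoes the default given for WinCreateMsgQueue in this section.

```c
#include <assert.h>
#include <stddef.h>

#define QSIZE 10

typedef struct { int msg[QSIZE]; size_t head, count; } msg_queue;
typedef long (*wnd_proc)(int msg, int *seen, size_t *nseen);

/* Post: enqueue and return at once; the message waits its turn (FIFO). */
int post_msg(msg_queue *mq, int msg)
{
    if (mq->count == QSIZE) return 0;     /* queue full */
    mq->msg[(mq->head + mq->count) % QSIZE] = msg;
    mq->count++;
    return 1;
}

/* Send: call the window procedure directly and return its result. */
long send_msg(wnd_proc proc, int msg, int *seen, size_t *nseen)
{
    return proc(msg, seen, nseen);
}

/* Later, the thread's loop takes posted messages off the queue. */
int get_msg(msg_queue *mq, int *msg)
{
    if (mq->count == 0) return 0;
    *msg = mq->msg[mq->head];
    mq->head = (mq->head + 1) % QSIZE;
    mq->count--;
    return 1;
}

/* The procedure records what it handled; it cannot tell how each
   message arrived. */
long record_proc(int msg, int *seen, size_t *nseen)
{
    seen[(*nseen)++] = msg;
    return msg * 10L;
}
```

A sent message is handled before anything still waiting in the queue. That is why PM uses sending for requests that need an immediate answer.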

```
 Mouse --------+
               |                          +--> Application Queue --> Window Procedure A
 Keyboard -----+--> System Queue ---------+--> Application Queue --> Window Procedure B
               |    (first in, first out) +--> Application Queue --> Window Procedure C
 Other Events -+

 Each window procedure: get message, perform action, return message.
```

fig. 3.? PM's System Queue and the Window Procedure Queues

PM Message Etiquette

A message will not be presented to an application until the previous message has been processed. Since the majority of an application's time is spent waiting for a message to arrive, PM allows other applications to process their respective messages in the interim. This is a form of cooperative multitasking that uses message processing to schedule applications. An ill-behaved application can slow down or even stop PM if it starts a time-consuming task while processing a PM message. A rule of thumb says that messages must be processed within 0.1 seconds.

Anatomy of a PM Program

A PM program consists of a main() procedure and a set of window procedures. The main() procedure allocates global data, does initialization, and creates the PM objects. It then goes into a loop waiting for messages to be placed onto its queue. When it finds a message, it dispatches it to the appropriate window procedure. When a special WM_QUIT message is received, main() cleans up after itself and terminates the program.

All PM programs use more or less the same main() template, which consists of about 40 lines of code. The heart of a PM application is its window procedures: the code that controls the attributes of a window and responds to the messages it generates. Here is an overview:

- Connect to PM - Issue a WinInitialize call to obtain an anchor block handle from PM. This handle is used with subsequent commands.

- Load the resources - This is a good place to load the separate resources that you created using PM tools. These separate resources can be text strings, text files, binary files containing graphics, or other separate pieces of information. PM provides several specialized API calls for loading resources, such as WinLoadString, WinLoadPointer, and WinLoadDlg.

- Create the message queue - Issue the WinCreateMsgQueue call to create your application's message queue and obtain a handle for it. You can specify the queue size through a parameter. The default size is 10 messages. The queue is the primary mechanism by which your application communicates with PM.

- Register the window classes - You must issue a WinRegisterClass call for every window class before creating one or more instances of a window. This call registers the window class and its associated window procedure with PM.

- Create and display the main windows - First, create all the windows that are direct children of the PM desktop. These are the most "senior" windows in your application. Issue a WinCreateStdWindow call to create a "standard" window. This call returns two handles: one that identifies the frame of the standard window, and one that identifies its client area.

- Create and display the child windows - Now that you've obtained the handles of the parent windows, you can create the child windows using WinCreateStdWindow or WinCreateWindow. Continue this process until you've created all the descendant windows for your application.

- Enter the message dispatch loop - At this point, your PM program is ready to receive messages and do some work. The main program enters a "while" loop issuing WinGetMsg calls to obtain messages from PM and then turns around and issues WinDispatchMsg calls to the appropriate window procedure via PM. The program will remain in this loop until the WinGetMsg call receives a WM_QUIT message. An application can terminate its own loop by posting a WM_QUIT message to its queue.

- Get a message - A WinGetMsg call retrieves a message from the application queue. If there are no messages in the queue, the WinGetMsg call blocks until a message arrives.

- Dispatch a message - A WinDispatchMsg call invokes the appropriate window procedure via PM and passes it the message to be processed. The window procedure has control at this point. A window procedure's code can be as straightforward as a switch statement with a case for each message type the procedure intends to process. The real work of a PM application is done within the case statements. Messages not of interest to the window procedure end up in the default section of the switch statement, which typically hands the message over to WinDefWindowProc. This is a PM-supplied window procedure that handles all the messages that your window procedure is not interested in processing.

- Destroy all windows - A well-behaved PM application cleans up after itself when it drops out of the loop. The WinDestroyWindow call releases the window's resources.

- Destroy the message queue - The WinDestroyMsgQueue call is issued to release the queue.

- Disconnect from PM - The WinTerminate call disconnects the application from PM.
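The overall shape of the main() message loop can be sketched with a toy queue and window procedure. WM_QUIT_SIM, get_msg and window_proc are hypothetical stand-ins for WM_QUIT, WinGetMsg and a real window procedure. Unlike WinGetMsg, this get_msg returns 0 on an empty queue instead of blocking, so the sketch can run on its own.

```c
#include <assert.h>
#include <stddef.h>

enum { WM_CREATE_SIM = 1, WM_PAINT_SIM, WM_CLOSE_SIM, WM_QUIT_SIM };

#define QSIZE 10
typedef struct { int q[QSIZE]; size_t head, count; } msg_queue;

static int post(msg_queue *mq, int msg)
{
    if (mq->count == QSIZE) return 0;
    mq->q[(mq->head + mq->count) % QSIZE] = msg;
    mq->count++;
    return 1;
}

/* Like WinGetMsg, returns 0 (ending the loop) when the quit message
   is retrieved; here an empty queue also ends the loop. */
static int get_msg(msg_queue *mq, int *msg)
{
    if (mq->count == 0) return 0;
    *msg = mq->q[mq->head];
    mq->head = (mq->head + 1) % QSIZE;
    mq->count--;
    return *msg != WM_QUIT_SIM;
}

typedef struct { msg_queue *mq; int painted; } app;

/* Window procedure: a switch with one case per interesting message. */
static void window_proc(app *a, int msg)
{
    switch (msg) {
    case WM_PAINT_SIM: a->painted++; break;
    case WM_CLOSE_SIM: post(a->mq, WM_QUIT_SIM); break; /* end the loop */
    default: break;                 /* WinDefWindowProc would go here */
    }
}

/* The dispatch loop; returns how many messages were dispatched. */
int run_message_loop(app *a)
{
    int msg, dispatched = 0;
    while (get_msg(a->mq, &msg)) {  /* WinGetMsg      */
        window_proc(a, msg);        /* WinDispatchMsg */
        dispatched++;
    }
    return dispatched;              /* then destroy windows and queue */
}
```

Posting the quit message from the close handler, rather than leaving the loop directly, lets messages already in the queue be processed first.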