Guide to Multitasking Operating Systems:Fundamentals of Operating Systems

| Guide to Multitasking Operating Systems |

|---|

An operating system is a program that acts as an intermediary between a user and the computer hardware. The purpose of an operating system is to provide an environment in which a user can execute programs in a convenient and efficient manner.

The operating system must ensure the correct operation of the computer system. To prevent user programs from interfering with the proper operation of the system, the hardware must provide appropriate mechanisms to ensure such proper behaviour.

The operating system provides certain services to programs and to the users of those programs in order to make the programming task easier.

Basically the functions of an operating system are:

- Program execution

- I/O operations

- File system

- Error detection

- Resource allocation

- Protection

Introduction to Operating Systems

The first and basic need of an OS is to overcome the idle time of the CPU. In the beginning, jobs were run one at a time with the computer operator literally operating the computer by loading time cards and pushing buttons on the computer console. As time progressed, resident monitors, the first OS's, sat in main memory and automatically transferred control from one job to the next. This reduced the idle time of the CPU between jobs but not during the execution of jobs.

With this automatic sequencing, however, the CPU is often idle. The problem is the speed of the mechanical I/O devices, which are intrinsically slower than electronic devices. Even a slow CPU works in the microsecond range, with millions of instructions executed per second. A fast disk, on the other hand, might have a response time of 15 milliseconds and a transfer rate of 1 megabits/second, on an order of a magnitude slower than a typical CPU.

The slowness of the I/O devices can mean that the CPU is often waiting for I/O. As an example, an assembler or compiler may be able to process 300 or more cards per second. A fast card reader, on the other hand, might read only 17 cards per second. This means that assembling a 1200 card program would require only 4 seconds of CPU time, but 60 seconds to read. Thus, the CPU is idle for 56 out of 60 seconds, or 93.3 percent of the time. The resulting CPU utilization is only 6.7 percent. The process is similar for output operations. The problem is that, while an I/O operation is occurring, the CPU is idle, waiting for the I/O to complete; while the CPU is executing, the I/O devices are idle.

Over time, of course, improvements in technology resulted in faster I/O devices. But CPU speeds increased even faster, so that the problem was not only unresolved, but also worsened.

Off-line Operations

One common solution was to replace the very slow card readers (input devices) and line printers (output devices) with magnetic-tape units. The majority of computer systems in the late 1950's and early 1960's were batch systems reading from card readers and writing to line printers or card punches. Rather than have the CPU read directly from cards, however, the cards were first copied onto a magnetic tape. When the tape was sufficiently full, it was taken down and carried over to the computer. When a card was needed for input to a program, the equivalent record was read from tape. Similarly, output was written to the tape and the contents of the tape would be printed later. The card readers and line printers were operated off-line, not by the main computer.

card reader ----> CPU ---- line printer a.) on-line

card reader ---- tape drives ---- CPU ---- tape drives ---- line printer b.) off-line

The main advantage of off-line operation was that the main computer was no longer constrained by the speed of the card readers and line printers, but was limited by the speed of the much faster magnetic tape units.

Buffering and Spooling

Buffering is the method of overlapping the I/O of a job with its own computation. The idea is quite simple. After data have been read and the CPU is about to start operating on them, the input device is instructed to begin the next input immediately. The CPU and the input device are then both busy. With luck, by the time that the CPU is ready for the next data item(record), the input device will have finished reading it. The CPU can then begin processing the newly read data, while the input device starts to read the following data. Similarly, this can be done for output. In this case, the CPU creates data that are put into a buffer until an output device can accept them.

Although off-line preparation of jobs continued for some time, it was quickly replaced in most systems. Disk systems became widely available and greatly improved on off-line operation. The problem with tape systems was that the card reader could not write onto one end of the tape while the CPU read from another. The entire tape had to be written before it was rewound and read. Disk systems eliminated this problem. Because the head is moved from one area of the disk to another, a disk can rapidly switch from the area of the disk being used by the card reader to store new cards to the position needed by the CPU to read the 'next' card.

In a disk system, cards are read directly from the card reader onto the disk. The location of card images is recorded in a table kept by the operating system. When a job is executed, the operating system satisfies its requests for card reader input by reading from the disk. Similarly, when the job requests the printer to output a line, that line is copied into a system buffer and is written to the disk. When the job is completed, the output is actually printed.

This form of processing is called spooling. The name is an acronym for simultaneous peripheral operation on-line. Spooling essentially uses the disk as a very large buffer, for reading as far ahead as possible on input devices and for storing output files until the output devices are able to accept them.

disk card reader CPU line printer

In addition, spooling provides a very important data structure: a job pool. Spooling will generally result in several jobs that have already been read waiting on disk, ready to run. A pool of these jobs on disk allows the operating system to select which job to run next, in order to increase CPU utilization. When jobs come in directly from tape, it is not possible to skip around and to run jobs in a different order. Jobs must be run sequentially, on a first-come, first-served basis. However, when several jobs are on a direct access device, such as a disk, job scheduling becomes possible.

The most important aspect of job scheduling is the ability to multiprogram. Off-line operation, buffering, and spooling for overlapped I/O have their limitations. A single user cannot, in general, keep either the CPU or the I/O devices busy at all times. Multiprogramming increases CPU utilization by organizing jobs so that the CPU always has something to execute.

The idea is as follows. The operating system picks and begins to execute one of the jobs in the job pool. Eventually, the job may have to wait for some task, such as a tape to be mounted, a command to be typed on a keyboard, or an I/O operation to complete. In a non-multiprogrammed system, the CPU would sit idle. In a multiprogramming system, the operating system simply switches to and executes another job. When that job needs to wait, the CPU is switched to another job, and so on. Eventually, the first job finishes waiting and gets the CPU back. As long as there is always some job to execute, the CPU will never be idle.

Multiprogrammed operating systems are fairly sophisticated. To have several jobs ready to run, the system must keep all of them in memory simultaneously. Having several programs in memory at the same time requires some form of memory management. In addition, if several jobs are ready to run at the same time, the system must choose among them. This decision is CPU Scheduling. Finally, multiple jobs running concurrently require that their ability to affect one another be limited in all phases of the operating system, including process scheduling, disk storage, and memory management.

0 ___________

| |

| monitor |

|___________|

| |

| job 1 |

|___________|

| |

| job 2 |

|___________|

| |

| job 3 |

|___________|

| |

| job 4 |

|___________|

| |

| |

512 K |___________|

Multitasking

Multitasking(or time sharing) is a logical extension of multiprogramming. Multiple jobs are executed by the CPU switching between them, but the switches occur so frequently that the users may interact with each program while it is running. To understand the difference, we will first review the batch method.

When batch systems were first developed, they were defined by the 'batching' together of similar jobs. Card- and tape-based systems allowed only sequential access to programs and data, so only one application package (the FORTRAN compiler, linker, and loader, or the COBOL equivalents, for instance) could be used at a time. As on-line disk storage became feasible, it was possible to provide immediate access to all of the application packages. Modern batch systems are no longer defined by the batching together of similar jobs; other characteristics are used instead.

A batch operating system normally reads a stream of separate jobs(from a card reader, for example), each with its own control cards that predefine what the job does. When the job is complete, its output is usually printed (on a line printer, for example). The definitive feature of a batch system is the lack of user interaction between the user and the job while that job is executing. The job is prepared and submitted. At some later time(perhaps minutes, hours, or days), the output appears. The delay between job submission and job completion(called turnaround time) may result from the amount of computing needed, or from delays before the operating system starts processing the job.

An interactive, or hands-on, computer system provides on-line communication between the user and the system. The user gives instructions to the operating system or to a program directly, and receives an immediate response. When the operating system finishes the execution of one command, it seeks the next 'control statement'. The user can easily experiment and can see results immediately.

Time-sharing systems were developed to provide interactive use of a computer system at a reasonable cost. A time-shared operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of a time-shared computer. Each user has a separate program in memory. When a program executes, it typically executes for only a short time before it either finishes or needs to perform I/O. I/O may be interactive; that is, output to a display, or input from a keyboard. Five characters per second may be fairly fast for people, but it is very slow for computers. Rather than let the CPU sit idle when this happens, the operating system will rapidly switch the CPU to the program of some other user.

Time-sharing operating systems are sophisticated. They provide a mechanism for concurrent execution. Also, as in multiprogramming, several jobs must be kept simultaneously in memory, which requires some form of memory management, protection, and CPU scheduling. So that a reasonable response time can be obtained, jobs may have to be swapped in and out of main memory. Hence, disk management must also be provided. Time-sharing systems must also provide an on-line file system and protection.

System Calls

System calls provide the interface between a running program and the operating system. These calls are generally available as assembly-language instructions, and are usually listed in the manuals used by assembly-language programmers.

Some systems may allow system calls to be made directly from a higher-level language program in which case the calls normally resemble predefined function or subroutine calls. They may generate a call to a special run-time routine that makes the system call, or the system call may be generated directly in-line.

Several languages, such as C, Bliss, and PL/360, have been defined to replace assembly language for systems programming. These languages allow system calls to be made directly.

Three general methods are used to pass parameters to the operating system. The simplest approach is to pass the parameters in registers. In some cases, however, there may be more parameters than registers. In these cases, the parameters are generally stored in a block or table in memory and the address of the block is passed as a parameter in a register. Parameters may also be placed, or pushed, onto the stack by the program, and popped off the stack by the operating system.

System calls can be roughly grouped into five major categories: process control, file manipulation, device manipulation, information maintenance, and communications

- Process Control

- End, Abort

- Load, Execute

- Create Process, Terminate Process

- Get Process Attributes, Set Process Attributes

- Wait for Time

- Wait Event, Signal Event

- Allocate Memory, Free Memory

- File Manipulation

- Create File, Delete File

- Open, Close

- Read, Write, Reposition

- Get File Attributes, Set File Attributes

- Device Manipulation

- Request Device, Release Device

- Read, Write, Reposition

- Get Device Attributes, Set Device Attributes

- Logically Attach or Detach Devices

- Information Maintenance

- Get Time or Date, Set Time or Date

- Get System Data, Set System Data

- Get Process, File, or Device Attributes

- Set Process, File, or Device Attributes

- Communications

- Create, Delete Communication Connection

- Send, Receive Messages

- Transfer Status Information

System Structure

Process Management

This topic deals with handling the many programs that may be in main memory at once.

Introduction to Process Management

A process is a program in execution. In general, a process will need certain resources-such as CPU time, memory, files, and I/O devices-to accomplish its task. These resources are allocated to the process either when it is created, or while it is executing.

A process is the unit of work in most systems. Such a system consists of a collection of processes: operating system processes execute system code, and user processes execute user code. All of these processes can potentially execute concurrently.

The operating system is responsible for the following activities in connection with process management: the creation and deletion of both user and system processes; the scheduling of processes, and the provision of mechanisms for synchronization, communication, and deadlock handling for processes.

A process is more than the program code plus the current activity. A process generally also includes the process stack containing temporary data(such as subroutine parameters, return addresses, and temporary variables), and a data section containing global variables.

Note : A program by itself is not a process; a program is a passive entity, such as the contents of a file stored on disk, whereas a process is an active entity, with a program counter specifying the next instruction to execute.

Process State

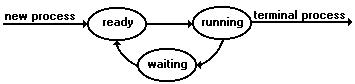

As a process executes, it changes state. The state of a process is defined in part by that process's current activity. Each process may be in one of three states:

Figure: Process State Diagram

- Running. Instructions are being executed.

- Waiting. The process is waiting for some event to occur(such as an I/O completion).

- Ready. The process is waiting to be assigned to a processor

These names are rather arbitrary, and vary between operating systems. The states they represent are found on all systems, however. It is important to realize that in a single-processor system, only one process can be running at any instant. Many processes may be ready and waiting, however.

Process Control Block

Each process is represented in the operating system by its own process control block(PCB). A PCB is a data block or record containing many pieces of the information associated with a specific process, including:

- Process State. The state may be new, ready, running, waiting, or halted.

- Program Counter. The counter indicates the address of the next instruction to be executed for this process.

- CPU State. This includes the contents of general purpose registers, index registers, stack pointers, and any condition-code information. Along with the program counter, this state information must be saved when an interrupt occurs, to allow the process to be continued correctly afterwards.

- CPU Scheduling Information. This information includes a process priority, pointers to scheduling queues, and any other scheduling parameters.

- Memory-management information. This information includes limit registers or page tables.

- I/O Status Information. The information includes outstanding I/O requests and a list of open files.

The PCB serves as the repository for any information that may vary from process to process.

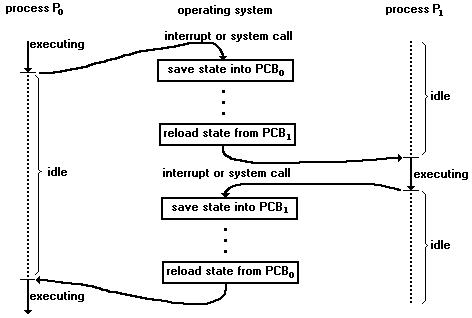

Figure: The CPU can be switched from process to process

Concurrent Processes

The processes in the system can execute concurrently; that is, many processes may be multitasked on a CPU. There are several reasons for allowing concurrent execution:

- Physical Resource Sharing. Since the computer hardware resources are limited, we may be forced to share them in a multi-user environment.

- Logical Resource Sharing. Since several processes may be interested in the same piece of information(for instance, a shared file), we must provide an environment to allow concurrent processes access to these types of resources.

- Computation speedup. If we want a particular task to run faster, we may break it into sub-tasks, each of which will be executing in parallel with the others. Notice that such a speed up can be achieved only if the computer has multiple processing elements(such as CPUs or channels)

- Modularity. We may want to construct the system in a modular fashion, dividing the system functions into separate processes.

- Convenience. Even an individual user may have many tasks to work on at one time. For instance, a user may be editing, printing, and compiling in parallel.

Concurrent execution that requires cooperation among the processes requires a mechanism for process synchronization and communication.

Threads

Recall that a process is defined by the resources it uses and by the location at which it is executing. There are many instances, however, in which it would be useful for resources to be shared and accessed concurrently. This situation is similar to the case where a fork system call is invoked with a new thread of control executing within the same virtual address space. This concept is so useful that several new operating systems are providing a mechanism to support it through the thread facility. A thread is a basic unit of CPU utilization. It has little non-shared state. A group of peer threads share code, address space, and operating system resources. The environment in which a thread executes is called a task. A traditional(heavyweight) process is equal to a task with one thread. A task does nothing if no threads are in it, and a thread must be in exactly one task. An individual thread has at least its own register state, and usually its own stack, The extensive sharing makes CPU switching among peer threads and threads' creation inexpensive, compared with context switches among heavyweight processes. Thus, blocking a thread and switching to another thread is a reasonable solution to the problem of how a server can efficiently handle many requests.

Threads are gaining in popularity because they have some of the characteristics of heavyweight processes but can execute more efficiently. There are many applications where this combination is useful. For instance, the UNIX kernel is single-tasking; Only one task can be executing in the kernel at a time. Many problems, such as synchronization of data access(locking of data structures while they are being modified) are avoided because only one process is allowed to be doing the modification. Mach, on the other hand, is multithreaded, allowing the kernel to service many requests simultaneously. In this case, the threads themselves are synchronous: another thread in the same group may run only if the currently executing thread relinquishes control. OF course, the current thread would only relinquish control only when it was not modifying shared data. On systems on which threads are asynchronous, some explicit locking mechanism must be used, just as in systems where multiple processes share data.

Scheduling Concepts

The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization. The idea is quite simple. A process is executed until it must wait, typically for the completion of some I/O request. In a simple computer system, the CPU would normally sit idle while the process waited for the completion of the event. In a multiprogramming system, several processes are kept in memory at a time. When one process has to wait, the operating system takes the CPU away from that process and gives it to another process. This pattern continues. Every time one process has to wait, another process may take over use of the CPU.

The benefits of multiprogramming are increased CPU utilization and higher throughput, which is the amount of work accomplished in a given time interval.

Scheduling Queues

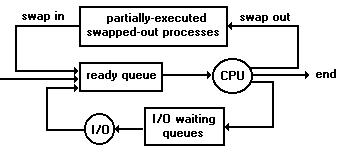

As processes enter the system, they are put into a job queue. This queue consists of all processes residing on mass storage awaiting allocation of main memory. The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue. This list is generally a linked-list. A ready-queue header will contain pointers to the first and last process control blocks in the list. Each PCB has a pointer field that points to the next process in the ready queue.

There are also other queues in the system. When a process is allocated the CPU, it executes for a while and eventually either quits or waits for the occurrence of a particular event, such as the completion of an I/O request. In the case of an I/O request, such a request may be to disk. Since there are many processes in the system, the disk may be busy with the I/O request of some other process. Thus, the process may have to wait for the disk. The list of processes waiting for a particular I/O device is called a device queue. Each device has its own device queue.

A common representation for a discussion of process scheduling is a queueing diagram, shown below. Each rectangular box represents a queue. Two types of queues are present: the ready queue and a set of device queues. The circles represent the resources that serve the queues, and the arrows indicate the flow of processes in the system.

Figure: Queueing Diagram

A new process is initially put into the ready queue. It waits in the ready queue until it is selected for execution and is given the CPU. Once the process is allocated the CPU and is executing, one of several events could occur:

- The process could issue an I/O request, and then be placed in an I/O queue.

- The process could create a new process and wait for its termination.

- The process could be forcibly removed from the CPU, as a result of an interrupt, and be put back into the ready queue.

In the first two cases, the process eventually switches from the waiting state to the ready state and is then put back into the ready queue. A process continues this cycle until it terminates, at which time it exits from the system.

Schedulers

A process migrates between the various scheduling queues throughout its lifetime. The operating system must select processes from these queues in some fashion. The selection process is carried out by the appropriate scheduler.

In a batch system, there are often more processes submitted than can be executed immediately. These processes are spooled to a mass storage device (typically a disk), where they are kept for later execution. The long- term scheduler (or job scheduler) selects processes from this pool and loads them into memory for execution. The short-term scheduler (or CPU scheduler) selects from among the processes that are ready to execute, and allocates the CPU to one of them.

The primary distinction between these two schedulers is the frequency of their execution. The short-term scheduler must select a new process for the CPU quite often. A process may execute for only a few milliseconds before waiting for an I/O request. Often, the short-term scheduler executes at least once every 10 milliseconds. Because of the short duration of time between executions, the short-term scheduler must be very fast. If it takes 1 millisecond to decide which process to execute for 10 milliseconds, then 1/(10 + 1) = 9 percent of the CPU is being used simply for scheduling the work(overhead).

The long-term scheduler, on the other hand, executes much less frequently. There may be minutes between creation of new processes in the system. The long-term scheduler controls the degree of multiprogramming(the number of processes in memory). If the degree of multiprogramming is to be stable, then the average rate of process creation must be equal to the average departure rate of processes leaving the system. Thus, the long-term scheduler may need to be invoked only when a process leaves the system. Because of the longer interval between executions, the long-term scheduler can afford to take more time to decide which process should be selected for execution.

On some systems, the long-term scheduler may be absent or minimal. For example, time-sharing systems often have no long-term scheduler, but simply put every new process in memory for the short-term scheduler. The stability of these systems depend either on a physical limitation or on the self-adjusting nature of human users.

Some operating systems, such as time-sharing systems, may introduce an additional, intermediate level of scheduling. This medium-term scheduler is diagrammed below. The key behind a medium-term scheduler is that it sometimes it can be advantageous to remove processes from memory (and from active contention of the CPU) and thus to reduce the degree of multiprogramming. At some later time, the process can be reintroduced into memory and its execution can be continued where it left off. This scheme is often called swapping. The process is swapped out and swapped in later by the medium-term scheduler. Swapping may be necessary to improve the process mix, or because a change in memory requirements has overcommitted available memory, requiring memory to be freed up.

CPU Scheduling

Scheduling is a fundamental operating system function. Almost all computer resources are scheduled before use. The CPU is, of course, one of the primary computer resources. Thus, its scheduling is central to operating system design.

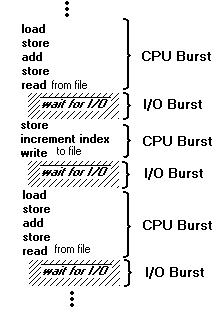

CPU-I/O Burst Cycle

The success of CPU scheduling depends on the following observed property of processes: Process execution consists of a cycle of CPU execution and I/O wait. Processes alternate back and forth between these two states. Process execution begins with a CPU burst. That is followed by an I/O burst, which is followed by another CPU burst, then another I/O burst, and so on. Eventually, the last CPU burst will end with a system request to terminate execution, rather with another I/O burst.

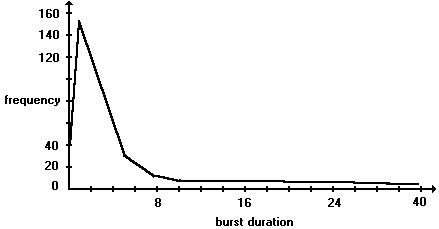

The durations of these CPU bursts have been measured. Although they vary greatly from process to process and computer to computer, they tend to have a frequency curve similar to that of below.

The curve is generally characterized as exponential or hyperexponential, There is a very large number of very short CPU bursts, and there is a small number of very long CPU bursts. An I/O bound program would typically have many very short CPU bursts. A CPU bound program might have a few very long CPU bursts. This distribution can be quite important in selecting an appropriate CPU scheduling algorithm.

Whenever the CPU becomes idle, the operating system must select one of the processes in the ready queue to be executed. The selection process is carried out by the short-term scheduler (or CPU scheduler). The scheduler selects from among the processes in memory that are ready to execute, and allocates the CPU to one of them.

Note that the ready queue is not necessarily a first-in-first-out(FIFO) queue. As we shall see when we consider the various scheduling algorithms, a ready queue may be implemented as a FIFO queue, a priority queue, a tree, or simply an unordered linked-list. Conceptually, however, all the processes in the ready queue are lined up waiting for a chance to run on the CPU. The records in the queues are generally PCB's of the processes.

Scheduling Structure

CPU scheduling decisions may take place under the following four circumstances:

- When a process switches from the running state to the waiting state (for example, I/O request), invocation of wait for the termination of one of the child processes)

- When a process switches from the running state to the ready state (for example, when an interrupt occurs)

- When a process switches from the waiting state to the ready state (for example, completion of I/O)

- When a process terminates

For circumstances 1 and 4, there is no choice in terms of scheduling. A new process(if one exists in the ready queue) must be selected for execution. This, however, is not the case for circumstances 2 and 3.

When scheduling takes place only under circumstances 1 and 4, we say the scheduling scheme is non-preemptive; otherwise, the scheduling scheme is preemptive. Under non-preemptive scheduling, once the CPU has been allocated to a process, the process keeps the CPU until it releases the CPU either by terminating or by switching to the waiting state.

Context-Switch

Switching the CPU to another process requires saving the state of the old process and loading the saved state for the new process. This task is known as a context-switch. Context-switch time is pure overhead. It varies from machine to machine, depending on the memory speed, the number of registers, and the existence of special instructions(such as a single instruction to load or store all registers). Typically, it ranges from 1 to 100 microseconds. Also, the more complex the operating system, the more work must be done during a context-switch.

Dispatcher

Another component involved in the CPU scheduling function is the dispatcher. The dispatcher is the module that actually gives control of the CPU to the process selected by the short-term scheduler. This function involves:

- Switching context

- Switching to user mode

- Jumping to the proper location in the user program to restart that program

Obviously, the dispatcher should be as fast as possible.

Scheduling Algorithms

CPU scheduling deals with the problem of deciding which of the processes in the ready queue is to be allocated to the CPU. There are many different CPU scheduling algorithms. These algorithms have different properties and may favour one class of processes over another. In choosing which algorithm to use in a particular situation, we must consider the properties of the various algorithms.

Many criteria have been suggested for comparing CPU scheduling algorithms. Which characteristics are used for comparison can make a substantial difference in the determination of the best algorithm. Criteria that are used include the following:

- CPU utilization We want to keep the CPU as busy as possible. CPU utilization may range from 0 to 100 percent. In a real system, it should range from 40 percent(for a lightly loaded system) to 90 percent (for a heavily used system).

- Throughput If the CPU is busy, then work is being done. One measure of work is the number of processes that are completed per time unit, called throughput.

- Turnaround time From the point of view of a particular process, the important criterion is how long it takes to execute that process. The interval from the time of submission to the time of completion is called turnaround time. Turnaround time is the sum of all the periods spent waiting to get into memory, waiting in the ready queue, executing on the CPU, and doing I/O.

- Waiting time The CPU scheduling algorithm does not really affect the amount of time during which a process executes or does I/O. The algorithm affects only the amount of time that a process spends waiting in the ready queue. Thus, rather than looking at turnaround time, we might simply consider the waiting time for each process.

- Response time In an interactive system, turnaround time may not be the best criterion. Often, a process can produce some output fairly early and can continue computing new results while previous results are being output to the user. Thus, another measure is the time from the submission of a request until the first response is produced. This measure, called response time, is the amount of time it takes to start responding, but not the time it takes output that response. The turnaround time is generally limited by the speed of the output device.

It is desirable to maximise CPU utilization and throughput, and to minimize turnaround time, waiting time, and response time. In most cases, we optimize the average measure. However, it may sometimes be desirable to optimize the minimum or maximum values, rather than the average.

First-Come, First-Served Scheduling

By far the simplest CPU scheduling algorithm is the first-come, first-served (FCFS) algorithm. With this scheme, the process that requests the CPU is allocated the CPU first. The implementation of the FCFS policy is easily managed with a First-In-First-Out(FIFO) queue. When a process enters the ready queue, its PCB is linked onto the tail of the queue. When the CPU is free, it is allocated to the process at the head of the ready queue. The FCFS scheduling is simple to write and understand.

The FCFS scheduling algorithm is nonpreemptive. Once the CPU has been allocated to a process, that process keeps the CPU until it wants to release the CPU, either by terminating or by requesting I/O. The FCFS algorithm is particularly troublesome for time-sharing systems. where it is important that each user get a share of the CPU at regular intervals. It would be disastrous to allow one process to keep the CPU for an extended period.

Shortest-Job-First Scheduling

A different approach to CPU scheduling is the shortest-job-first(SJF) algorithm.(The term shortest process first is not used because most people and textbooks refer to this type of scheduling discipline as shortest-job-first) This algorithm associates with each process the length of the next CPU burst. When the CPU is available, it is assigned to the process that has the next smallest CPU burst. If two processes have the same length CPU burst, FCFS scheduling is used to break the tie.

The SJF algorithm is provably optimal, in that it gives the minimum average waiting time for a given set of processes. Given two processes, with one having a longer execution time than the other, it can be shown that moving a short process before a long process decreases the waiting time of the long process. Consequently the average waiting time decreases.

The real difficulty with the SJF algorithm is knowing the length of the next CPU request. For a long-term(job) scheduling in a batch system, we can use the process time limit. Thus, users are motivated to estimate the process time limit accurately, since a lower value may mean faster response. (Too low a value will cause a "time-limit-exceeded" error and require resubmission.) SJF scheduling is used frequently in process scheduling.

Although the SJF algorithm is optimal, that is, not other algorithm can deliver better performance, it cannot be implemented at the level of short-term CPU scheduling. There is no way to know the length of the next CPU burst. One approach is to try to approximate SJF scheduling. We may not know the length of the next CPU burst, but we may be able to predict its value. We expect that the next CPU burst will be similar in length to the previous ones. Thus, by computing an approximation of the length of the next CPU burst, we can pick the process with the shortest predicted CPU burst.

The SJF algorithm may be either preemptive or non-preemptive. The choice arises when a new process arrives at the ready queue while a previous process is executing. The new process may have a shorter next CPU burst than what is left of the currently executing process. A preemptive SJF algorithm will preempt the currently executing process, whereas a non-preemptive SJF algorithm will allow the currently running process to finish its CPU burst. Preemptive SJF scheduling is sometimes called shortest-remaining-time-first scheduling.

Priority Scheduling The SJF algorithm is a special case of the general priority scheduling algorithm. A priority is associated with each process, and the CPU is allocated to the process with the highest priority. Equal-priority processes are scheduled in FCFS order.

An SJF algorithm is simply a priority algorithm where the priority(p) is the inverse of the (predicted) next CPU burst(t ): p = 1/t. The larger the CPU burst, the lower the priority and vice versa.

Note that we discuss scheduling in terms of high and low priority. Priorities are generally some fixed range of numbers, such as 0 to 7, or 0 to 4095. However, there is no general agreement on whether 0 is the highest or lowest priority. Some systems use low numbers to represent low priority, others use low numbers for high priority. This difference can lead to confusion. In the text, we assume that low numbers represent high priority.

Priority scheduling can be either preemptive or non-preemptive. When a process arrives at the ready queue, its priority is compared with the priority of the currently running process. A preemptive priority scheduling algorithm will preempt the CPU if the priority of the newly arrived process is higher than the priority of the currently running process. A non-preemptive priority scheduling algorithm will simply put the new process at the head of the ready queue.

A major problem with priority scheduling algorithms is indefinite blocking or starvation A process that is ready to run but lacking the CPU can be considered blocked, waiting for the CPU. A priority scheduling algorithm can leave some low-priority processes waiting indefinitely for the CPU. In a heavily loaded computer system, a steady stream of higher-priority processes can prevent a low-priority process from ever getting the CPU. Generally, one of two things will happen. Either the process will eventually be run(At 2 A.M. Sunday, when the system is finally lightly loaded) or the computer system will eventually crash and lose all unfinished low-priority processes.

A solution to the problem of indefinite blockage of low-priority processes is ageing. Ageing is a technique of gradually increasing the priority of processes that wait in the system for a long time. For example, if priorities range from 0(high) to 127(low), we could decrement a waiting process's priority by 1 every minute. Eventually, even a process with an initial priority of 127 would have the highest priority in the system and would be executed. In fact, it would take no more than 2 hours and 7 minutes for a priority 127 process to age to a priority 0 process.

Round-Robin Scheduling

The round-robin(RR) scheduling algorithm is designed especially for time-sharing systems. A small unit of time, called a time quantum or time-slice is defined. A time quantum is generally from 10 to 100 milliseconds. The ready queue is treated as a circular queue. The CPU scheduler goes around the ready queue, allocating the CPU to each process for a time interval of up to 1 time quantum.

To implement RR scheduling, we keep the ready queue as a first-in, first-out (FIFO) queue of processes. New processes are added to the tail of the ready queue. The CPU scheduler picks the first process, sets a timer to interrupt after 1 time quantum, and dispatches the process.

One of two things will then happen. The process may have a CPU burst of less than 1 time quantum. In this case, the process itself will release the CPU voluntarily. The scheduler will then proceed to the next process in the ready queue. Otherwise, it the CPU burst of the currently running process is greater than 1 time quantum, the timer will go off and will cause an interrupt to the operating system. A context switch will be executed, and the process will be put at the tail of the ready queue. The CPU scheduler will then select the next process from the ready queue.

In the RR scheduling algorithm, no process is allocated the CPU for more than 1 time quantum in a row. If a process's CPU burst exceeds 1 time quantum, that process os preempted and is put back in the ready queue. The RR scheduling algorithm is inherently preemptive.

The performance of the RR algorithm depends heavily on the size of the time quantum. At one extreme, if the time quantum is very large(infinite), the RR policy is the same as the FCFS policy. If the time quantum is very small(say 10 milliseconds), the RR approach is called processor sharing, and appears(in theory) to the users as though each of n processes has its own processor running at 1/n the speed of the real processor.

For operating systems, we need to consider the effect of context switching on the performance of RR scheduling. Let use assume that we have only 1 process of 10 time units. If the quantum is 12 time units, the process finishes in less than 1 time quantum, with no overhead. If the quantum is 6 time units, however, the process will require 2 quanta, resulting in a context switch. If the time quantum is 1 time unit, then 9 context switches will occur, slowing the execution of the process accordingly.

Thus, we want the time quantum to be large with respect to the context switch time. If the context switch time is approximately 5 percent of the time quantum, then about 5 percent of the CPU time will be spent in context switch.

Summary of CPU Scheduling implementations

| FCFS | inherently non-preemptive |

| SJF | preemptive or non-preemptive |

| Priority | preemptive or non-preemptive |

| Round-Robin | inherently preemptive |

Multilevel Queue Scheduling

Another class of scheduling algorithms has been created for situations in which classes of processes are easily classified into different groups. For example, a common division is made between foreground (interactive) processes and background(batch) processes. These two types of processes have quite different response-time requirements, and so might have different scheduling needs, In addition, foreground processes may have priority(externally defined) over background processes.

A multilevel queue scheduling algorithm partitions the ready queue into separate queues as shown below. Processes are permanently assigned to one queue, generally based on some property of the process, such as memory size or process type. Each queue has its own scheduling algorithm. For example, separate queues might be used for foreground and background processes. The foreground process queue might be scheduled by a RR algorithm, while the background queue is scheduled by an FCFS algorithm. In addition, there must be scheduling between the queues. This is commonly a fixed-priority preemptive scheduling. For example, the foreground queue may have an absolute priority over the background queue.

Multilevel Feedback Queue Scheduling

Normally, in a multilevel queue scheduling algorithm, processes are permanently assigned to a queue on entry to the system. Processes do not move between queues. If there are separate queues for foreground and background processes, for example, processes do not move from one queue to the other, since processes do not change their foreground or background nature. This setup has the advantage of low scheduling overhead, but is inflexible.

Multilevel feedback queue scheduling, however, allows a process to move between queues. The idea is to separate processes with different CPU- burst characteristics. If a process uses too much CPU time, it will be moved to a lower priority queue. This scheme leaves I/O-bound and interactive processes in the higher priority queues. Similarly, a process that waits too long in a lower-priority queue may be moved to a higher-priority queue. This is a form of ageing that would prevent starvation.

In general, a multifeedback queue scheduler is defined by the following parameters:

- The number of queues

- The scheduling algorithm for each queue

- The method used to determine when to upgrade a process to a higher- priority queue

- The method used to determine when to demote a process to a lower-priority queue

- The method used to determine which queue a process will enter when that process needs service

The definition of a multilevel feedback queue scheduler makes it the most general CPU scheduling algorithm. It can be configured to match a specific system under design. Unfortunately, not also requires some means of selecting values for all the parameters to define the best scheduler. Although a multilevel feedback queue is the most general scheme, it is also the most complex.

Process Coordination

Concurrent processing is the basis of multiprogrammed operating systems. Concurrent systems consist of a collection of processes: operating systems execute system code, and user processes execute user code. All these processes can potentially execute concurrently. To ensure orderly execution, the system must provide mechanisms for process synchronization and communication.

Producer-consumer processes are common in operating systems. A producer process produces information that is consumed by a consumer process. For example, a print program produces characters that are consumed by a printer driver. A compiler may produce assembly code, which is consumed by an assembler. The assembler, in turn, may produce object modules, which are consumed by the loader.

To allow producer and consumer processes to run concurrently, we must create a pool of buffers that can be filled by the producer and emptied by the consumer. A producer can produce into one buffer while the consumer is consuming from another buffer. The producer and consumer must be synchronized, so that the consumer does not try to consume items that have not yet been produced. In this situation, the consumer must wait until an item is produced.

Memory Management

This topic deals with managing the memory of the many programs that may be running at once.

Introduction to Memory Management

In Process Management, it was showed how the CPU can be shared by a set of processes. As a result of CPU scheduling, we can improve both the utilization of the CPU and the speed of the computer's response to its users. To realize this increase in performance, however, we must keep several processes in memory; we must share memory.

Memory is central to the operation of a modern computer system. Memory is a large array of words, or bytes, each with its own address. Interaction is achieved through a sequence of reads or writes to specific memory addresses. The CPU fetches from and stores to, memory. A typical instruction cycle, for example, will first fetch an instruction from memory. The instruction is then decoded and may cause operands to be fetched from memory. After the instruction on the operands has been executed, results may be stored back into memory. Notice that the memory unit only sees a stream of memory addresses; it does not know how they are generated(the instruction pointer, indexing, and so on) or what they are for(instructions or data). Accordingly, we can ignore how a memory address is generated by a program. We are interested in only the sequence of memory addresses generated by the running program.

Address Binding

To reference any particular memory address, one needs a location of that memory address. These are physical addresses. In programs, the memory locations that are referenced are called logical addresses because an address specified by a program may or may not be the correct physical address. There are basically three different ways that logical addresses are mapped to physical addresses, which is called address binding. These address binding techniques depend on the memory management methods that the OS of your computer uses.

Compile-time address binding generates absolute code, in other words, the addresses are already in place, and when the program is run,the logical addresses or either absolute where logical locations 000 - 999 actually correspond to physical locations 000 - 999, or where an offset is added to the logical locations to generate the physical locations. For example, in a simple system, the OS resides in physical locations 000 - 500. Any program would have to start at location 501. A program that has the logical address space of 000 - 999 would be modified so that logical locations 000 - 999 would be changed to correspond to physical locations 501 - 1500. It is known at compile-time where the program will reside in memory. If the program had to be loaded into a different starting location, then the program would have to be re-compiled. Modern computer systems do not use this method because it isn't very flexible.

Load-time address binding If the starting location of the program isn't known at compile time of the program, then the compiler will generate re-locatable code, i.e. the locations would be changed during the loading of the program Using the above example, at load-time, when the system does know where the program will be put at, then the load-time binding would map logical locations 000 - 999 to physical locations 501 - 1500 , or 1000 - 1999, depending on the circumstances at load-time. DOS programs are run in this fashion.

Run-time address binding If the starting location of the program is to be moved during execution of the program, then the binding must be delayed until run-time. Physical addresses are generated during execution by adding the value of logical addresses to an offset register. Using the above example, the program would have logical locations 000 - 999, but then at run-time, the value of the logical address would be added with the value of an offset register, in this case 501, to reference the physical locations 501 - 1500. When the base location of the program is moved from501 to, say 1001, then the value of the offset register is changed from 501 to 1001.

Dynamic Loading/Linking

Overlays

A problem that many computer systems were getting in the past, was that the logical address spaces of programs had to be less than the physical address spaces of the computers they were running on. To overcome this, overlay techniques were introduced so that only a necessary part of a program could be brought into memory.time. When the next part of the program is needed, it is brought into the address space occupied by a part of the program that is no longer necessary. In other words, it replaces the previous part of the program.

Overlay techniques did not need any special support from the OS. The trouble with overlays, however, was that special relocation and linking algorithms were needed. The programmer, in essence, had the burden of managing memory for the system. In 1961, a method was proposed for performing the overlay process automatically without the programmer even knowing it was happening. This method, now called Virtual Memory, had the obvious advantage of freeing the programmer from a lot of annoying bookkeeping.

Swapping

Paging

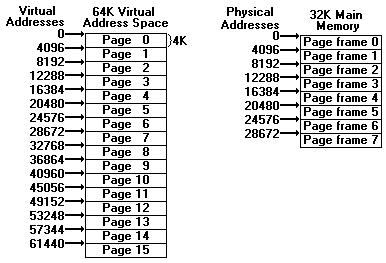

One essential requirement for virtual memory is a secondary memory in which to keep the program. Do not confuse this with the program file which may be located on the same disk. The virtual address space is broken up into a number of equal-sized chunks called pages. Page sizes range from 512 bytes to 4K. The physical address space is broken up into pieces in a similar way, each piece being the same size as a page, so that each piece of main memory is capable of holding exactly one page. These pieces are called page-frames. In typical systems, there may be thousands of page-frames present. The information concerning the mapping of virtual addresses to physical addresses is kept in a page table.

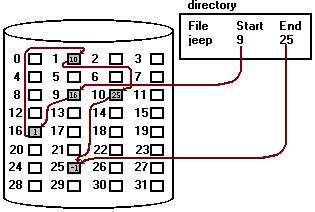

fig. 2. Allocation of pages and page-frames in an example system.

In this example, programs generate 16-bit addresses as they normally would, except that they are no longer physical addresses, but virtual addresses. The 16-bit virtual address is divided into a 4-bit virtual page number and a 12-bit address within the selected page.

virtual ___________________________________________

address | | physical

__________|address within ________\|/_____ address

|0010|000000001111| |111|000000001111|

|_P__|_____D______| |_F_|_____D______|

|virtual |page

|page 2 |frame 7

| |

| |

| Page Table |

| |

| page __________________ |

| 0 |x|yyyyyyyyyyyy|zzz| |

| |_|____________|___| |

| 1 |0|000000000000|n/a| |

| |_|____________|___| |

--------------->2 |1| n/a |111|------

|_|____________|_F_|

. legend:

. P - Page number

. D - displacement from page

zzz __________________ base address

2 - 1 | | | | F - Page frame number

|_|____________|___|

x - present/not present bit

y...y - secondary memory addr.

zzz - page frame number(F)

fig 2.3 Simple overview of paging

Virtual Memory

This topic deals with the use of secondary storage for simulating the actual main memory of a computer system.

Introduction to Virtual Memory

The idea is to separate the concepts of address space and memory locations. Consider an example if a computer with a 16-bit address field in its instructions and 4096 bytes of memory. A program on this computer can address 65536 bytes of memory. The reason is that 65536(2^16) 16-bit addresses exist. The number of addressable words depends only on the number of bits in an address and is in no way related to the number of bytes actually available. The address space for this computer consists of the numbers 0,1,2, ... ,65535, because that is the set of possible addresses.

Before virtual memory, people would have made a distinction between the addresses below 4096 and those equal to or above 4096. These two were regarded as the useful address space and the useless address space(as addresses above 4095 didn't correspond to actual memory addresses), respectively. In these cases, there was a one-to-one correspondence between address space and actual memory addresses.

The idea of separating the address space and the memory addresses is as follows: At any instant of time, 4096 bytes of memory can be directly addressed but they need not correspond to addresses 0 to 4095. We could, for example, "tell" the computer henceforth whenever address 4096 is referenced, memory word 0 is to be used. Whenever address 4097 is referenced, memory word 1 is to be used; whenever address 8191 is referenced, memory word 4095 is to be used, and so forth. In other words, we have a mapping from the address space onto the actual memory addresses, as shown below.

Addresses 64K address space

________________

0 | |

| | 4K main memory

|________________| ________________

4096 | | ---------------->| | 0

| | | |

8191 |________________| ---------------- |________________| 4095

| |

| |

| |

| |

| |

| |

| |

| |

| |

| |

| |

65535 |________________|

fig. 2.4 Mapping in which addresses 4096 to 8191 are mapped onto main memory addresses 0 to 4095.

On a machine without virtual memory, if a program attempted to jump to an address between greater than physical memory present, a trap would occur, saying something like, "Non-existant memory location" and the program would then be terminated. On a machine with virtual memory, the following steps would occur to reference an address between 8192 and 12287:

- The contents of main memory would be saved to secondary memory(disk).

- Bytes 8192 to 12287 would be located in secondary memory.

- Bytes 8192 to 12287 would be loaded into main memory.

- The address map would be changed to map addresses 8192 to 12287 onto memory locations 0 to 4095.

- Execution would continue as though nothing had happened.

This technique for automatic overlaying is called paging and the chunks of program read in from secondary memory are called pages. For emphasis, the addresses that the programs refer to will be called the virtual address space, and the actual, hardwired memory addresses the physical address space. A memory map relates virtual addresses to physical addresses. We presume that there is enough room in secondary memory(disk) to store the whole program and its data.

Programs are written just as though there were enough main memory for the whole virtual address space, even though such is not the case. Programs may then load from, store into, or jump to any word in the virtual address space, without regard to the fact that there really is not enough physical memory. To the programmer, the paging mechanism is said to be transparent.

Demand Paging

In the above example, the page was in main memory. However, that assumption will not always be true because there is not enough room in main memory for all the virtual pages. When a reference is made to an address on a page not present in main memory, it is called a page fault. After a page fault has occurred, it is necessary for the operating system to read in the required page from secondary memory, enter its new physical memory location in the page table, and then repeat the instruction that caused the fault.

It is possible to start a program running on a machine with virtual memory even when none of the program is in main memory. The page table merely has to be set to indicate that each and every virtual page is in the secondary memory and not in main memory. When the CPU tries to fetch the first instruction, it immediately gets a page fault, which causes the page containing the first instruction to be loaded and entered in the page table. Then the first instruction can begin. If the first instruction has two addresses(as operands), with the two addresses on different pages, both different from the instruction page, two more page faults will occur, and two more pages will be brought in before the instruction can finally execute. The next instruction may possibly cause more page faults, and so forth.

This method of operating a virtual memory is called pure demand paging. In pure demand paging, pages are brought in only when an actual request for a page occurs, not in advance. This method ensures that only the pages that are needed by a program are in memory. Pages that contain code for error handling or other infrequently used routines, which may or may not be needed for the smooth running of a program, are kept out of the valuable main memory and in secondary memory until needed. Most operating systems do not use pure demand paging in the strictest sense, but rather, model after it. Such as, having attributes associated with sections of code or data of a program that tell the operating system when to load. Thus, the code for the first n pages of a program might have been flagged to be loaded when the program is executed in order to drastically reduce the number of page faults that might have occurred under pure demand paging.

Page Replacement

Up to now, we have assumed that there is always a vacant page frame in which to put the newly loaded page. In general, this will not be true, and it will be necessary to remove some page(i.e. copy it back to the secondary memory) to make room. Thus, an algorithm is needed to decide which page is to be removed.

Choosing a page to remove at random is certainly not a good idea. Most operating systems try to predict which of the pages in memory is the least useful in the sense that its absence would have the smallest adverse effect on the running program. One way of doing so is to make a prediction when the next reference to each page will occur and remove the page whose predicted next reference lies furthest in the future. In other words, rather than evict a page that will be needed shortly, try to select one that will not be needed for a long time.

One popular algorithm evicts the page least recently used because the probability of its not being in the current working set is high. It is called the Least Recently Used(LRU) algorithm. LRU replacement associates with each page the time of that page's last use. When a page must be replaced, LRU chooses that page that has not been used for the longest period of time.

Reference String (page no.'s) 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1 _ _ _ _ _ _ _ _ _ _ _ _ |7| |7| |7| |2| |2| |4| |4| |4| |0| |1| |1| |1| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| | | |0| |0| |0| |0| |0| |0| |3| |3| |3| |0| |0| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| |-| | | | | |1| |1| |3| |3| |2| |2| |2| |2| |2| |7| - - - - - - - - - - - - page frames

fig ?.? LRU Page Replacement Algorithm

Thrashing

If the working set is larger than the number of available page frames, no algorithm that is not an oracle will give good results, and page faults will be frequent. A program that generates page faults frequently and continuously is said to be thrashing.

Working Set Model

It has been observed that most programs do not reference their address space randomly but that the references tend to cluster on a small number of pages. A memory reference may fetch an instruction, it may fetch data, or it may store data. At any instant in time, t, there exists a set of all the pages used by the k most recent memory references. This has been called the working set w(k,t). Because the k + 1 most references must have used all the pages used by the k most recent references, and possibly others, w(k,t) is a monotonically nondecreasing function of k. The limit of w(k,t) as k becomes large is finite, because the program cannot reference more pages than its address space contains, and few programs will use every single page.

Secondary Storage Management

This topic deals with the management of disks of a computer system.

Introduction to Secondary Storage

The main purpose of a computer system is to execute programs. These programs, together with the data they access, must be in main memory during execution. Ideally, we would want the programs and data to reside in main memory permanently. this is not possible for two reasons:

- Main memory is usually too small to store all needed programs and data permanently.

- Main memory is a volatile storage device that loses its contents when power is turned off or lost.

The main requirement of secondary storage is thus to be able to hold very large numbers of data permanently.

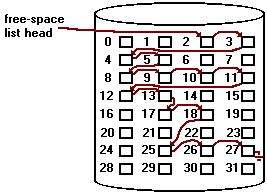

Free Space Management

Since there is only a limited amount of disk space, it is necessary to reuse the space from deleted files for new files. To keep track of free disk space, the system maintains a free-space list. The free-space list records all disk blocks that are free (that is, are not allocated to some file). To create a file, we search the free-space list for the required amount of space, and allocate that space to the new file. This space is then removed from the free-space list. When a file is deleted, its disk space is added to the free-space list. The free-space list, despite its name, might not be implemented as a list, as we shall see.

Bit Vector

Frequently, the free-space list is implemented as a bit map or bit vector. Each block is represented by 1 bit. If the block is free, the bit is 0; if the block is allocated, the bit is 1.

For example, consider a disk where blocks 2,3,4,5,8,9,10,11,12,13,17,18,25,26, and 27 are free, and the rest of the blocks are allocated. The free-space bit map would be

110000110000001110011111100011111...

The main advantage of this approach is that it is relatively simple and efficient to find n consecutive free blocks on the disk. Indeed, many computers supplied bit-manipulation instructions that can effectively be used for that purpose. For example, the Intel 80386 and the Motorola 68020 and 68030 have instructions that return the position of the first bit with the value of 1 in a register. Unfortunately, bit vectors are inefficient unless the entire vector is kept in main memory for most accesses (and is written to disk occasionally for recovery needs). Keeping it in main memory is possible for smaller disks, such as on microcomputers, but not for larger ones.

Linked List

Another approach is to link all the free disk blocks together, keeping a pointer to the first free block. This block contains a pointer to the next free disk block, and so on. In our example, we would keep a pointer to block 2, as the first free block. Block 2 would contain a pointer to block 3, which would point to block 4, and so on.

This scheme is not efficient; to traverse the list, we must read each block, which requires substantial I/O time.

Grouping

A modification of the free-list approach is to store the addresses of n free blocks in the first free block. The first n - 1 of these are actually free. The last one is the disk address of another block containing the addresses of another n free blocks. The importance of this implementation is that the addresses of a large number of free blocks can be found quickly.

Counting

Another approach is to take advantage of the fact that, generally, several contiguous blocks may be allocated or freed simultaneously. Thus, rather than keeping a list of n free disk addresses, we can keep the address of the first free block and the number of n free contiguous blocks that follow the first block. Each entry in the free-space list then consists of a disk address and a count. Although each entry requires more space than would a simple disk address, the overall list will be shorter, as long as the count is generally greater than 1.

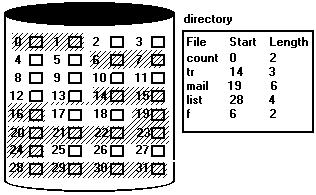

Allocation Methods

The direct access nature of disks allows us flexibility in the implementation of files. In almost every case, many files will be stored on the same disk. The main problem is how to allocate space to these files so that disk space is utilized effectively and files can be accessed quickly. Three major methods of allocating disk space are in wide use: contiguous, linked, and indexed. Each method has its advantages and disadvantages. For the purpose of this discussion, a file may be considered to be a sequence of blocks. All the basic I/O functions operate in terms of blocks. The conversion from logical records to physical blocks is a relatively simple software problem.

Contiguous Allocation

The contiguous allocation method requires each file to occupy a set of contiguous addresses on the disk. Notice that, with this ordering, accessing block b + 1 after block b normally requires no head movement. When head movement is needed(from the last sector of one cylinder to the first sector of the next cylinder), it is only one track. Thus, the number of disk seeks required for accessing contiguously allocated files is minimal, as is seek time when a seek is finally needed.

The difficulty with contiguous allocation is finding space for a new file. Once the implementation of the free-space list is defined, we can decide how to find space for a contiguously allocated file. If the file to be created is n blocks long, we must search the free-space list for n free contiguous blocks. For a bit map, we need to find n 0 bits in a row; for a list of addresses and counts, we need a count of at least n.

The algorithms that are used to allocate disk space to files suffer from external fragmentation. As files are allocated and deleted, the free disk space is broken into little pieces. External fragmentation exists when enough total disk space exists to satisfy a request, but this space is not contiguous; storage is fragmented into a large number of small holes. Depending on the total amount of disk storage and the average file size, external fragmentation may be either a minor or major problem.

Some older microcomputer systems used contiguous allocation on floppy disks. To prevent loss of significant amounts of disk space to external fragmentation, the user had to run a repacking routine that copied the entire file system onto another floppy disk or onto a tape. The original floppy was then completely freed, creating one large contiguous free hole. The routine then copied the files back onto the floppy by allocating contiguous space from this one large hole. This scheme effectively compacted all free space into one hole, solving the fragmentation problem. The cost of this compaction is time. Copying all the files from a disk to compact space may take hours and may be necessary on a weekly basis.

There are other problems with contiguous allocation. A major problem is determining how much space is needed for a file. When the file is created, the total amount of space it will need must be found and allocated. How does the creator(program or person) know the size the file to be created? In some cases, this determination may be fairly simple(copying an existing file, for example), but in general, the size of an output file may be difficult to estimate.

If we allocate too little space to a file, we may find that that file cannot be extended. Especially with a best-fit allocation strategy, the space on both sides of the file may be in use. We simply cannot make the file larger. Two possibilities then exist. First, the user program can be terminated, with an appropriate error message. The user must then allocate more space and run the program again. These repeated runs may be costly. To prevent them, the user will normally overestimate the amount of space needed, resulting in considerable wasted space.

The other possibility is to find a larger hole, to copy the contents of the file to the new space, and to release the previous space. This series of actions may be repeated, as long as space exists, although it can also be time-consuming. Notice, however, that in this case the user never needs to be informed explicitly about what is happening; the system continues despite the problem, albeit more and more slowly.

Even if the total amount of space needed for a file is known in advance, preallocation may be inefficient. A file that grows slowly over a long period, must be allocated enough space for its final size, even though much of that space may be unused for a long period of time.

Linked Allocation

The problems discussed with contiguous allocation can be traced directly to the requirement that space be allocated contiguously. Linked allocation solves these problems. With linked allocation, each file is a linked list of disk blocks; the disk blocks may be scattered anywhere on the disk. The directory contains a pointer to the first and last blocks of the file. For example, a file of five blocks might start at block 9, continue at block 16, then block 1, block 10, and finally, block 25(see below).

Each block contains a pointer to the next block. These pointers are not made available to the user. Thus, if each sector is 512 words, and a disk address(the pointer) requires two words, then the user sees blocks of 510 words.

Creating a file is easy. We simply create a new entry in the device directory. With linked allocation, each directory entry has a pointer to the first disk block of the file. This pointer is initialized to nil(the end-of-the-list pointer value) to signify an empty file. The size field is also set to zero. A write to a file removes the first free block from the free-space list, and writes to that block. This new block is then linked to the end of the file. To read a file, we simply read blocks by following the pointers from block to block.

There is no external fragmentation with linked allocation. Any free block on the free-space list can be used to satisfy a request, since all blocks are linked together. Notice also, that there is no need to declare the size of the file when that file is created. A file can continue to grow as long as there are free blocks. Consequently, it is never necessary to compact free disk space.

Linked allocation does have its disadvantages, however. The major problem is that it can be used effectively for only sequential access files. To find a block of the file, we must start at the beginning of that file and follow the pointers till we get to the ith block. Each access to a pointer requires a disk read. Hence, it is inefficient to support a direct-access capability for linked allocation files.

Another disadvantage is reliability. Since the files are linked together by pointers that are scattered all over the disk, consider what would happen if a pointer were lost or damaged. A bug in the operating system software or a disk hardware failure might result in picking up the wrong pointer. This error could result in linking into the free-space list or into another file. One partial solution is to use doubly linked lists or to store the file name and relative block number in each block; these schemes require even more overhead for each file.

An important variation on the linked allocation method is the use of a file allocation table(FAT). A section of disk that contains a table is set aside. The table has one entry for each disk block, and is indexed by block number. The table is used much as is a linked list. The directory contains the block number of the first block of the file. The table entry indexed by that block number then contains the block number of the next block in the file. This chain continues until the last block, which has a special end-of-file value. Allocating a new block to a file is a simple matter of finding the first zero-valued table entry, and replacing the previous end-of-file value of the file's table entry with the address of the new block. The zero of this new block is then replaced with the end-of-file value. This simple but efficient method is used by the DOS and OS/2 operating systems.

Indexed Allocation

Linked allocation solves the external-fragmentation problem and size-declaration problems of contiguous allocation. However, linked allocation cannot support direct access, since the blocks are scattered all over the disk. Equally detrimental, the pointers to the blocks are scattered all over the disk. Indexed allocation solves this problem by bringing all the pointers together into one location: the index block.

Each file has its own index block, which is an array of disk-block addresses. The ith entry in the index block points to the ith block of the file. The directory contains the address of the index block, see below. To read the ith block, we use a pointer in the ith index-block entry to find and read the desired block.

When the file is created, all pointers in the index block are set to nil. When the ith block is first written, a block is removed from the free-space list, and its address is put in the ith index-block entry.

Indexed allocation supports direct-access, without suffering from external fragmentation. Any free block anywhere on the disk may satisfy a request for more space.

Indexed allocation does suffer from wasted space. The pointer overhead of the index blocks is generally greater than the pointer overhead of linked allocation. Most files are small. Assume that we have a file of only one or two blocks. With linked allocation, we lose the space of only one pointer per block (one or two pointers). With indexed allocation, an entire index block must be allocated, even if only one or two pointers will be non-nil

This point raises the question of how large the index block should be. Every file must have an index block, so we want the index block to be as small as possible. If the index block is too small, however, it will not be able to hold enough pointers for a large file, and a mechanism will have to be available to deal with this issue:

* Linked scheme. An index block is normally one disk block. Thus, it can be read and written directly by itself. To allow for large files, we may link together several index blocks. For example, an index block might contain a small header giving the name of the file, and a set of the first 100 disk-block addresses. The next address(the last word in the index block) is nil(for a small file), or is a pointer to another index block(for a large file). * Multilevel index. A variant of the linked representation is to use a separate index block to point to the index blocks, which point to the file blocks themselves. To access a block, the operating system uses the first level index block to find the second-level index to find the desired data block. This approach could be continued to a third or fourth level, but two levels of indexes are generally sufficient. If we can get 512 pointers into an index block, then two levels of indexes allows 262,144 data blocks, which (at 1024 bytes each) allows a file of up to 268,435,456 bytes - a size that exceeds the physical capacity of many devices. * Combined scheme. Another alternative, used in the BSD UNIX system, is to keep the first, say 15 pointers of the index block in the device directory. The first 12 of these pointers point to direct blocks; that is, they directly contain addresses of blocks that contain data of the file. Thus, the data for small(no more than 12 blocks) files do not need a separate index block. If the block size is 4096 bytes, then up to 48KB of data may be accessed directly. The next three pointers point to indirect blocks. The first indirect-block pointer is the address of a single indirect block The single indirect-block is an index blockm containing not data, but rather the addresses of the blocks that contain pointers to the actual data blocks. The last pointer would contain the address of a triple indirect block.